Apache Kafka

What it is, how it works, benefits & challenges. This guide provides definitions and practical advice to help you better understand Apache Kafka.


What is Apache Kafka?

Apache Kafka is an open-source distributed event streaming platform which is optimized for ingesting and transforming real-time streaming data. By combining messaging, storage, and stream processing, it allows you to store and analyze historical and real-time data.

Why Kafka Matters

The platform is typically used to build real-time streaming data pipelines that support streaming analytics and mission-critical use cases. It guarantees ordering within each partition and, when configured appropriately, can provide delivery without message loss and exactly-once processing.

Apache Kafka is massively scalable because it allows data to be distributed across multiple servers, and it’s extremely fast because it decouples data streams, which results in low latency. It can also distribute and replicate partitions across many servers, which protects against server failure.

How Apache Kafka Works

At a high level, Apache Kafka allows you to publish and subscribe to streams of records, store these streams in the order they were created, and process these streams in real time.
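
As a rough illustration of the publish, store-in-order, and subscribe idea described above, here is a minimal in-memory sketch. It is not Kafka's API: `RecordLog`, `Subscriber`, and the event names are hypothetical, and a real cluster persists and replicates the log rather than keeping it in a Python list.

```python
from dataclasses import dataclass, field


@dataclass
class RecordLog:
    """Toy append-only log standing in for one ordered stream of records."""
    records: list = field(default_factory=list)

    def publish(self, value):
        """Append a record and return its position (offset) in the log."""
        self.records.append(value)
        return len(self.records) - 1

    def read_from(self, offset):
        """Return every record at or after the given offset, in append order."""
        return self.records[offset:]


@dataclass
class Subscriber:
    """Tracks its own read position, so subscribers do not affect each other."""
    log: RecordLog
    position: int = 0

    def poll(self):
        """Return records not yet seen by this subscriber and advance past them."""
        batch = self.log.read_from(self.position)
        self.position += len(batch)
        return batch


log = RecordLog()
for event in ["order-created", "order-paid", "order-shipped"]:
    log.publish(event)

analytics = Subscriber(log)
billing = Subscriber(log)

first_batch = analytics.poll()    # everything published so far, in order
log.publish("order-delivered")
second_batch = analytics.poll()   # only the record published since the last poll
billing_batch = billing.poll()    # billing has not polled yet, so it reads the full history
```

Because each subscriber keeps its own position, a slow or late reader never blocks the publisher or the other readers.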


Now let’s dig a bit deeper.

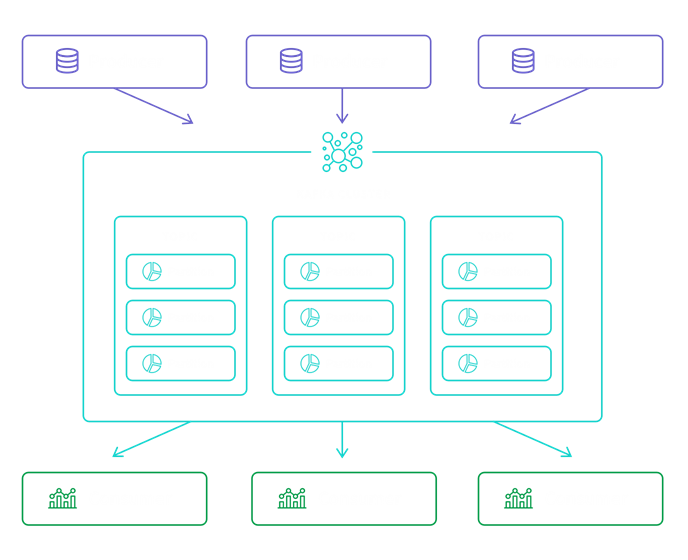

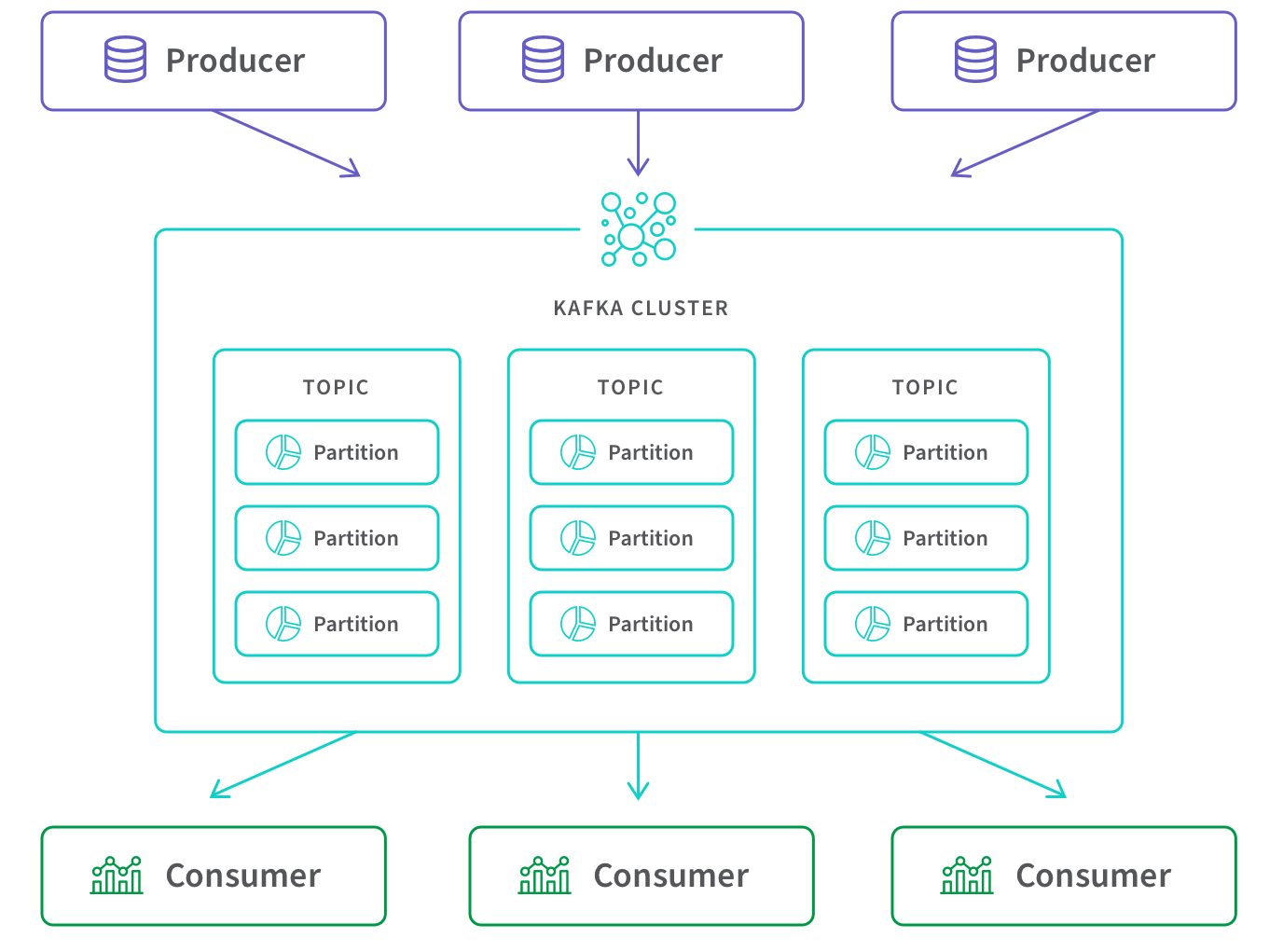

Running on a horizontally scalable cluster of commodity servers, Apache Kafka ingests real-time data from multiple "producer" systems and applications—such as logging systems, monitoring systems, sensors, and IoT applications—and at very low latency makes the data available to multiple "consumer" systems and applications such as real-time analytics.

Consumers can range from analytics platforms to applications that rely on real-time data processing. Examples include logistics or location-based micromarketing applications.

Here we define the terms shown above:

Producer: Client applications that push events into topics.

Cluster: One or more servers (called brokers) running Apache Kafka.

Topic: The means of categorizing and durably storing events. There are two types of topics: compacted and regular. Records in compacted topics do not expire based on time or space bounds. Instead, a newer message replaces older messages that have the same key, and Apache Kafka keeps the latest message for each key until a user deletes it. For regular topics, records can be configured to expire, so that old data is deleted to free storage space.

Partition: The mechanism to distribute data across multiple storage servers (brokers). Messages are indexed and stored together with a timestamp, and are ordered by their position (offset) within a partition. Partitions are distributed across the nodes of a cluster and are replicated to multiple servers to ensure that Apache Kafka delivers message streams in a fault-tolerant manner.

Consumers: Client applications that read and process the events from partitions. The Apache Kafka Streams API allows you to write Java applications that pull data from topics and write results back to Apache Kafka. External stream processing systems such as Apache Spark, Apache Apex, Apache Flink, Apache NiFi, and Apache Storm can also be applied to these message streams.
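
To make the topic, partition, and compaction definitions above more concrete, the following toy model may help. It is a sketch under stated assumptions, not Kafka's client API: `ToyTopic` and its methods are hypothetical names, and CRC32 is used only as a convenient stable hash (Kafka's default partitioner for keyed records uses a different hash function, murmur2).

```python
import zlib
from collections import namedtuple

Record = namedtuple("Record", ["offset", "key", "value"])


class ToyTopic:
    """In-memory stand-in for a topic split into partitions (not Kafka's API)."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # Assumption: CRC32 as a stable hash, so equal keys map to the same partition.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key, value):
        """Append to the partition chosen by the key; return (partition, offset)."""
        p = self.partition_for(key)
        log = self.partitions[p]
        offset = log[-1].offset + 1 if log else 0
        log.append(Record(offset, key, value))
        return p, offset

    def consume(self, partition, from_offset=0):
        """Read one partition in offset order, starting at from_offset."""
        return [r for r in self.partitions[partition] if r.offset >= from_offset]

    def compact(self):
        """Keep only the newest record per key in each partition; offsets are unchanged."""
        for i, log in enumerate(self.partitions):
            latest = {}
            for record in log:
                latest[record.key] = record  # later records overwrite earlier ones
            self.partitions[i] = sorted(latest.values(), key=lambda r: r.offset)


topic = ToyTopic(num_partitions=3)
topic.produce("sensor-1", "20.5C")
topic.produce("sensor-2", "18.0C")
p, last_offset = topic.produce("sensor-1", "21.0C")

before = [r.value for r in topic.consume(p) if r.key == "sensor-1"]
topic.compact()
after = [r.value for r in topic.consume(p) if r.key == "sensor-1"]
```

Before compaction the partition holds both readings for `sensor-1`. Afterwards only the newest one remains, which matches the behavior described for compacted topics.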


Apache Kafka Benefits

Apache Kafka’s message broker system can sequentially and incrementally process a massive inflow of continuous data streams that are simultaneously produced by thousands of data sources with high throughput and durability. The data integration benefits are:

Scalability

By dividing a topic into multiple partitions, Apache Kafka provides load balancing over a pool of servers. This allows you to scale production clusters up or down to fit your needs and to spread clusters across geographic regions or availability zones.
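
The load-balancing idea can be sketched as a simple round-robin spread of partitions over servers. This is only an illustration: the function name and broker names are hypothetical, and actual partition placement in a Kafka cluster is decided by the cluster itself and considers more factors than this.

```python
def assign_round_robin(num_partitions, servers):
    """Spread partition ids over the given servers as evenly as possible."""
    assignment = {server: [] for server in servers}
    for partition in range(num_partitions):
        owner = servers[partition % len(servers)]
        assignment[owner].append(partition)
    return assignment


# Six partitions over three servers: two partitions each.
three_servers = assign_round_robin(6, ["broker-a", "broker-b", "broker-c"])

# The same six partitions over two servers: three partitions each.
two_servers = assign_round_robin(6, ["broker-a", "broker-b"])
```

Adding a server lowers the number of partitions each one has to serve, which is the sense in which partitioning lets a cluster scale out.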

Speed

By decoupling data streams, Apache Kafka is able to deliver messages at network-limited throughput using a cluster of servers with extremely low latency (as low as 2 ms).

Durability

Apache Kafka makes the data highly fault-tolerant and durable in two main ways. First, it protects against server failure by distributing storage of data streams across a fault-tolerant cluster. Second, it persists messages to disk and replicates them within the cluster, so a copy survives if a single server is lost.

Apache Kafka Challenges

While Apache Kafka can be a powerful addition to enterprise data management infrastructures, it also poses some challenges. Two key challenges are as follows:

1) The need for IT teams to work with yet another set of APIs. There are five main types of APIs:

- Admin APIs allow for managing brokers, topics, and other objects.

- Producer APIs allow applications to publish streams of records to a topic.

- Consumer APIs allow applications to subscribe to topics and to process their streams of records.

- Connector APIs automate the addition of applications or data systems to existing topics.

- Streams APIs allow apps to act as stream processors, consuming input streams from one or more topics, transforming them, and producing the results to one or more output topics.
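
The consume, transform, and produce pattern behind the Streams API can be sketched without any Kafka library. The example below uses plain Python lists as stand-ins for an input and an output topic; `run_stream_processor`, `hot_readings_in_fahrenheit`, and the sensor data are all hypothetical, and the real Streams API is a Java library with its own types.

```python
def run_stream_processor(input_records, transform):
    """Apply transform to each (key, value) record and collect the results.

    A transform may return None to drop a record (a filter step).
    """
    output_records = []
    for key, value in input_records:
        result = transform(key, value)
        if result is not None:
            output_records.append(result)
    return output_records


def hot_readings_in_fahrenheit(key, celsius):
    """Keep readings of 30 degrees Celsius or more, converted to Fahrenheit."""
    if celsius < 30.0:
        return None
    return key, celsius * 9 / 5 + 32


# Stand-in for records read from an input topic.
input_topic = [("sensor-1", 20.0), ("sensor-2", 35.0), ("sensor-1", 30.0)]

# Stand-in for the records that would be written to a separate output topic.
output_topic = run_stream_processor(input_topic, hot_readings_in_fahrenheit)
```

The input records are left untouched, and the derived records go to a separate destination, which mirrors the description of producing results to an output topic.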

2) Degrading the performance of source systems. Pulling real-time data from diverse source systems can degrade the performance of those systems if the extraction is not implemented correctly. Many organizations find that coupling Qlik Replicate® to Apache Kafka helps mitigate source performance issues and accelerates data streaming from a wide variety of heterogeneous databases, data warehouses, and big data platforms.
