Real-Time Analytics

What it is, how it works, challenges, and examples. This guide provides practical advice to help you understand and manage real-time data analytics.


What is Real-Time Analytics?

Real-time analytics refers to the use of tools and processes to analyze and respond to real-time information about your customers, products, and applications as it is generated. This gives you in-the-moment insights into your business and customer activity and lets you quickly react to changing conditions.

Gartner defines two types of real-time analytics: “on-demand”, where the system provides analytic results only when a user or application submits a query, and “continuous”, where the system proactively alerts users or triggers responses in other applications as the data is generated.
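To make the two delivery styles concrete, here is a minimal Python sketch. The `OrderMetrics` class, its method names, and the order values are hypothetical, chosen only for illustration; a production system would sit on top of a stream processor rather than an in-memory object.

```python
from typing import Callable, List


class OrderMetrics:
    """Toy metric store that supports both delivery styles (hypothetical names)."""

    def __init__(self, alert_threshold: float, on_alert: Callable[[float], None]):
        self.total = 0.0
        self.count = 0
        self.alert_threshold = alert_threshold
        self.on_alert = on_alert

    def ingest(self, order_value: float) -> None:
        # Continuous: each record is evaluated as it arrives, and the
        # system pushes an alert without anyone submitting a query.
        self.total += order_value
        self.count += 1
        if order_value >= self.alert_threshold:
            self.on_alert(order_value)

    def average(self) -> float:
        # On-demand: the result is produced only when a caller asks for it.
        return self.total / self.count if self.count else 0.0


alerts: List[float] = []
metrics = OrderMetrics(alert_threshold=1000.0, on_alert=alerts.append)
for value in [20.0, 1500.0, 40.0]:
    metrics.ingest(value)

print(metrics.average())  # on-demand query: 520.0
print(alerts)             # pushed during ingestion: [1500.0]
```

The same data feeds both paths. The difference is who initiates the result: the caller (on-demand) or the arrival of the data itself (continuous).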

Benefits

Real-time analytics is broadly applied in nearly every industry. This is due to the rapid pace of business today, customer expectations for immediate response and personalized recommendations, and the continuing growth of real-time applications, big data, and the Internet of Things (IoT).

The primary overall benefit of real-time analytics is speed. It helps you grow your business by responding to market events faster than your competitors. And it saves you money by identifying issues such as fraud or production problems before they escalate.

Here are the key benefits of real-time data analytics in more detail:

Improve competitiveness and customer satisfaction. Real-time reporting and analytics tools can automatically generate reports, alarms, and other actions in response to real-time data. For example, interactive visualizations can deliver real-time insights, or you can set rules so that you are notified by text when key performance indicators reach certain levels. You can also have these tools analyze your real-time data, often using machine learning algorithms, and provide in-the-moment insights. This kind of active intelligence helps you respond faster than your competitors to market events and customer issues.

- Respond to customers faster with in-the-moment awareness of their behavior and needs.

- Develop and refine new products faster by getting real-time insights on how your users are interacting with your products.

- Optimize campaigns, websites, and apps faster by delivering personalized offers and tests in real time.

Reduce fraud and other losses. Real-time data analytics makes you aware of time-sensitive issues that can result in significant losses, such as inventory outages, cybercrimes, fraud, technology failures, security breaches, and production issues. You can then quickly respond to, and perhaps prevent, issues before they grow.

Be more efficient. A real-time analytics platform can also improve efficiency across your organization. For example, people analytics teams have up-to-date information on employee engagement, the IT department can focus on other priorities as the volume of custom queries decreases, and executives can make decisions much faster when they don’t need to wait for data to be updated. The best real-time analytics tools enable predictive analytics to guide your decision making and prescriptive analytics to automatically recommend the optimal course of action.

How Real-Time Analytics Works

Real-time data analytics combines historical data with streaming data to deliver automated metrics and insights within dashboards or embed them directly into machine-driven processes.

To support your real-time analytics platform, you’ll need a broader cloud architecture and a robust streaming data pipeline to consume, store, enrich, and analyze this flowing data in real time as it is generated.

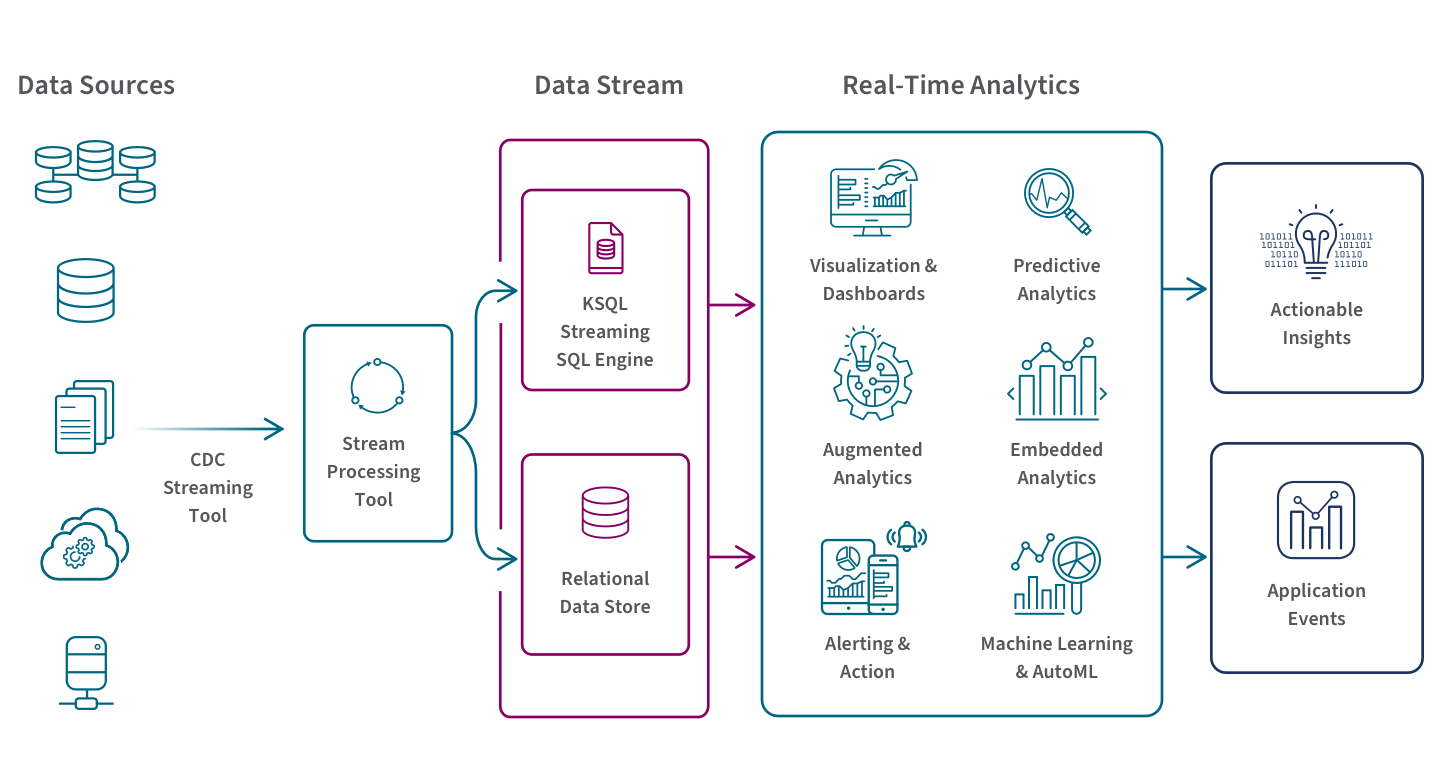

Real-Time Analytics Architecture:

Let’s walk through the above diagram:

1. Aggregate your data sources. Use a change data capture (CDC) streaming tool to connect your transactional systems or relational databases to a stream processor. Common real-time data sources include IoT/sensors, app activity, server logs, online advertising, and clickstream data.

2. Build a stream processor. You can use a tool such as Amazon Kinesis or Apache Kafka to process your streaming data sequentially and incrementally on a record-by-record basis. Your stream processor should be scalable, fast, fault-tolerant, and integrated with downstream applications for presentation or triggered actions.

3. Query or store your streaming data. Use a tool such as Snowflake, Google BigQuery, Google Cloud Dataflow, or Amazon Kinesis Data Analytics to filter, aggregate, correlate, and sample your data. You can query the data stream itself as it’s streaming using ksqlDB, a streaming SQL engine for Apache Kafka. You can also store your streamed data in the cloud and query it later. For storage, you can use a data warehouse or object store such as Amazon Redshift, Amazon S3, or Google Cloud Storage.

4. Perform analytics. Use a modern real-time analytics tool to conduct analysis, data science, and machine learning or AutoML. These tools can also trigger alerts and events in other applications. Here are some specific use cases:

- Interactive data dashboards and visualizations that deliver alerts and help you uncover insights in real time, so you can quickly respond to changes in KPIs.

- Triggered events in other applications, such as ad-buying software that automatically processes bids and purchases online advertising based on predefined rules.

- Algorithms applied in-stream, which means data scientists and AutoML models don’t have to wait for data to reside in a database.

- Real-time predictive analytics that predicts outcomes and prescriptive analytics that suggests a course of action based on up-to-the-minute data.
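The record-by-record, incremental processing described in step 2 can be sketched in a few lines of Python. This is an illustration only: the in-memory `stream` list stands in for a real source such as a Kafka topic or Kinesis stream, and the `(sensor_id, reading)` record shape and the `running_means` function are assumptions, not part of any of the tools named above.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[str, float]  # (sensor_id, reading); hypothetical record shape


def running_means(events: Iterable[Event]) -> Iterator[Tuple[str, float]]:
    """Yield the updated mean for a sensor after each record arrives."""
    counts: Dict[str, int] = defaultdict(int)
    means: Dict[str, float] = defaultdict(float)
    for sensor_id, reading in events:
        counts[sensor_id] += 1
        # Incremental update: adjust the previous mean instead of
        # re-reading every earlier record for this sensor.
        means[sensor_id] += (reading - means[sensor_id]) / counts[sensor_id]
        yield sensor_id, means[sensor_id]


# In-memory stand-in for a live stream.
stream = [("a", 10.0), ("b", 4.0), ("a", 20.0), ("a", 30.0)]
results = list(running_means(stream))
print(results)  # [('a', 10.0), ('b', 4.0), ('a', 15.0), ('a', 20.0)]
```

Because the state kept per sensor is only a count and a mean, the cost of handling each record stays constant no matter how much data has already flowed through.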

Real Time vs Batch

Traditional batch analytics is passive in that it relies on preconfigured, historical data sets. It supports business intelligence reporting where lagging KPIs track what has already happened. It wasn’t designed to support real-time decisions and actions. Real-time reporting and analytics, by contrast, delivers continuous insights from up-to-date information and is designed to trigger immediate actions.

Still, you may choose to use both a batch layer and a real-time layer to cover the spectrum of your data analytics needs. Here’s a side-by-side look at real-time vs. batch analytics, and how they can work in tandem to give you a complete solution.

| | Real Time | Batch |
|---|---|---|
| Data Analysis | Deep analysis of dynamic, time-sensitive data. | Deep analysis of static, historical data. |
| Query Scope | Only the most recent data record (or within a rolling time window). | Queries the entire dataset. |
| Data Ingestion | A continual sequence of individual records. | Batches of large data sets. |
| Processing | Processes only the most recent data record or within a rolling time window. Native support for semi-structured data reduces or removes the need for ETL. | Processes the entire dataset and can put up with ETL pipelines taking the time to transform the data. |
| Latency | Low latency: data is available for querying in milliseconds or seconds. | High latency: data for queries is minutes to hours old. |
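To illustrate the query-scope difference in the table, the Python sketch below compares a rolling 60-second window with a batch total over the same records. The `RollingWindowSum` class, the window length, and the sample records are hypothetical, and the sketch assumes records arrive in timestamp order.

```python
from collections import deque
from typing import Deque, Tuple


class RollingWindowSum:
    """Sum of values whose timestamps fall within the last `window_seconds`."""

    def __init__(self, window_seconds: float):
        self.window_seconds = window_seconds
        self.items: Deque[Tuple[float, float]] = deque()
        self.total = 0.0

    def add(self, timestamp: float, value: float) -> float:
        self.items.append((timestamp, value))
        self.total += value
        # Evict records that have fallen out of the window
        # (assumes timestamps arrive in non-decreasing order).
        while self.items and self.items[0][0] <= timestamp - self.window_seconds:
            _, old_value = self.items.popleft()
            self.total -= old_value
        return self.total


records = [(0.0, 5.0), (30.0, 7.0), (61.0, 1.0), (95.0, 2.0)]

# Real-time view: the answer is refreshed after every record.
window = RollingWindowSum(window_seconds=60.0)
rolling = [window.add(ts, value) for ts, value in records]

# Batch view: one answer over the entire dataset, computed afterward.
batch_total = sum(value for _, value in records)

print(rolling)      # [5.0, 12.0, 8.0, 3.0]
print(batch_total)  # 15.0
```

The rolling view answers "what is happening now" after every record, while the batch view answers "what happened overall" once the full dataset is available.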

Challenges

Real-time analytics can help you gain a competitive advantage, but it’s a relatively new capability, and there are a few key challenges to be aware of as you develop your real-time analytics architecture.

High availability and low response times. Your data flows continuously, at high velocity, and at high volume. It can also be heterogeneous, volatile, and incomplete. So, in managing your data stream, here are the key challenges for your real-time analytics system:

- Scalability. Your real-time data sources will likely be producing terabytes of big data and this volume can spike quickly and vary over time. This is why it’s a good idea to store your data in a cloud data warehouse.

- Durability and consistency. Put data governance and data lineage processes in place, because the data you read may already have been modified in another data system, leaving your copy stale.

- Fault tolerance. Your system needs to manage data flows in a variety of formats from many sources while preventing disruptions from a single point of failure.

- Ordering. To resolve data discrepancies and support applications, you need to know the sequence of data in the data stream.
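As one way to picture the ordering challenge, the Python sketch below buffers out-of-order records and releases them in sequence. It assumes each record carries a producer-assigned sequence number with no duplicates; the `in_order` function and the `(sequence_number, payload)` record shape are hypothetical.

```python
import heapq
from typing import Iterable, Iterator, List, Tuple

Record = Tuple[int, str]  # (sequence_number, payload); hypothetical shape


def in_order(records: Iterable[Record], first_seq: int = 0) -> Iterator[Record]:
    """Buffer out-of-order records and release them in sequence order."""
    pending: List[Record] = []  # min-heap keyed on sequence number
    next_seq = first_seq
    for record in records:
        heapq.heappush(pending, record)
        # Release every buffered record that is next in line.
        while pending and pending[0][0] == next_seq:
            yield heapq.heappop(pending)
            next_seq += 1


arrived = [(1, "b"), (0, "a"), (3, "d"), (2, "c")]
print(list(in_order(arrived)))
# [(0, 'a'), (1, 'b'), (2, 'c'), (3, 'd')]
```

Note that this sketch holds records back indefinitely if a sequence number never arrives. A real pipeline would typically add a timeout or a lateness limit so that a single missing record cannot stall the stream.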

Cost. You’ll need to invest in a new set of tools that can perform instant analysis on continually flowing data. However, the benefits of establishing this real-time analytics architecture can justify the investment over the long term.

Internal buy-in. You’ll also need to clearly define what you mean by real time, which data sources to include, which internal processes should be adjusted, and what training various teams will need.

Examples of Real-Time Analytics

Real-time big data analytics is applied in many ways in nearly every industry today. Here are the four main use case categories:

Real-time personalization. Real-time analytics can help you deliver online content and promotional offers based on each user’s profile and their actions in the moment. For example, an ecommerce store could serve personalized recommendations to a customer based not only on their historical shopping data but also on what that customer just added to their cart.

Preemptive maintenance. Real-time analytics can help you monitor equipment and detect problems so you can schedule service or order new parts in advance. This preemptive approach reduces downtime and improves productivity.

Performance optimization. Real-time analytics can help you optimize resource allocation with in-the-moment adjustments to activities and processes. For example, when combined with supply chain analytics, a freight provider can eliminate inefficient shipping routes by using real-time data on traffic and delays.

Fraud and error detection. Real-time analytics can help you take immediate action to stop fraud and errors by comparing historical data with current information. For example, a financial services company could detect anomalies in transaction activity and put a hold on an account to prevent further loss.
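Comparing historical data with current information can be as simple as a z-score check, sketched below in Python. This is an illustration under stated assumptions, not a production fraud model: the `is_anomalous` function, the threshold of 3 standard deviations, and the sample amounts are all hypothetical.

```python
from statistics import mean, stdev
from typing import List


def is_anomalous(history: List[float], amount: float, z_threshold: float = 3.0) -> bool:
    """Flag an amount that sits far from the account's historical mean."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Every past amount was identical, so any deviation stands out.
        return amount != mu
    return abs(amount - mu) / sigma > z_threshold


# Hypothetical past transaction amounts for one account.
history = [42.0, 38.5, 51.0, 45.25, 40.0]

print(is_anomalous(history, 47.0))   # False: within the usual range
print(is_anomalous(history, 900.0))  # True: candidate for an account hold
```

In practice, the historical statistics would be maintained incrementally per account so that each incoming transaction can be scored as it arrives.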