Real-Time Data

What it is, how it works, challenges, and examples. This guide provides practical advice to help you understand and manage data in real time.

Real-Time Data Guide

What is Real-Time Data?

Real-time data refers to information that is made available for use as soon as it is generated. Ideally, the data passes instantly from the source to the consuming application, but bottlenecks in data infrastructure or network bandwidth can introduce lag. Real-time data is used in time-sensitive applications such as stock trading and navigation, and it powers real-time analytics, which delivers in-the-moment insights and helps you react quickly to changing conditions.

Benefits

Real-time data is used in nearly every industry today, driven by the rapid pace of modern business, high customer expectations for immediate, personalized responses, and the growth of real-time applications, big data, and the Internet of Things (IoT).

Here are the key benefits:

Make Faster, Better Decisions. A real-time analytics tool gives you an in-the-moment understanding of what's happening in your business. It can automatically trigger alarms, update dashboards and reports, and take other actions in response to real-time data. These timely insights help you optimize your business faster than your competitors. For example, your revenue operations team can spot revenue risks before they escalate.

Meet Customer Expectations. Customers today rely on weather, navigation, and ride-sharing apps that deliver time-sensitive data, and they expect this level of instant, personalized service in all aspects of their lives. Using data in real time allows you to give your customers the information they need instantly.

Reduce Fraud, Cybercrime, and Outages. Issues such as fraud, security breaches, production problems, and inventory outages can escalate quickly and cause significant losses for your organization. Real-time data lets you monitor your business continuously so that you can respond to these issues before they become critical.

Reduce IT Infrastructure Expense. Working with data in real time allows you to better monitor and report on your IT systems and take a more proactive approach to troubleshooting servers, systems, and devices. Plus, real-time data is usually stored in lower volumes, which results in lower storage and hardware costs.


Real-Time Data Processing

To realize the full potential of real-time data, you'll need a broad cloud architecture. To consume, store, enrich, and analyze data as it is generated, you can use stream processing systems such as Apache Kafka and off-the-shelf data streaming services from a number of cloud providers. However, you may run into integration issues with legacy databases or systems. Thankfully, you can also build your own custom data streams using a robust ecosystem of tools, some of them open source.

Below we describe how real-time data processing works and the data streaming technologies you need for each of the four key steps. Remarkably, this entire process can take place in milliseconds.

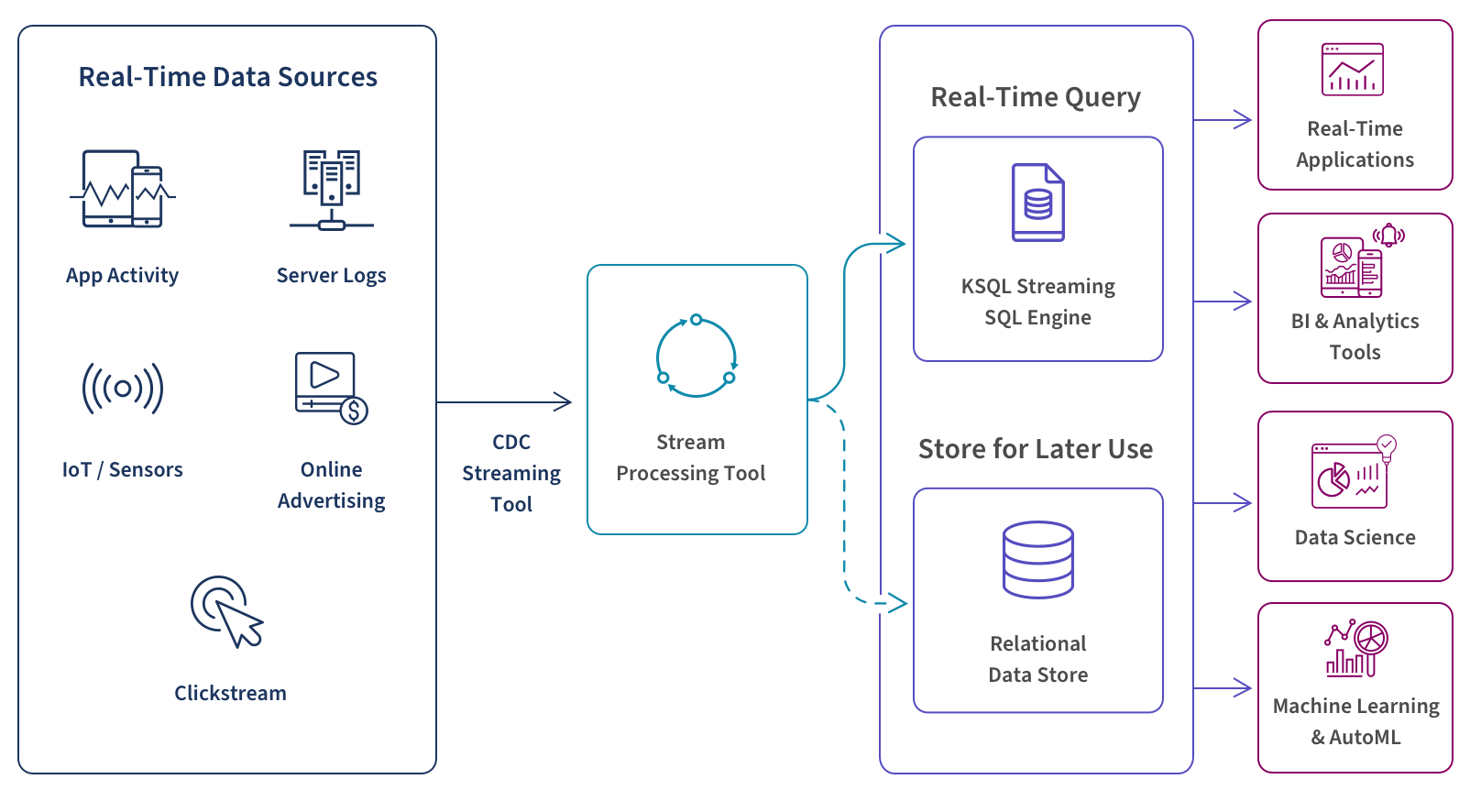

Real-Time Data Architecture:

1. Aggregate your data sources. Typical real-time data sources include IoT devices and sensors, server logs, app activity, online advertising, and clickstream data. Connect all of these sources to a stream processor. For transactional systems and relational databases, use a change data capture (CDC) streaming tool, which captures inserts, updates, and deletes as they happen.

2. Implement a stream processor. Using a tool such as Amazon Kinesis or Apache Kafka, you then process your streaming data record by record, sequentially and incrementally, or over sliding time windows. To keep up with fast-moving big data, your stream processor needs to be fast, scalable, and fault tolerant. You will also integrate it with downstream applications for presentation or triggered actions.

3. Perform real-time queries (or store your data). Next, your infrastructure needs to filter, aggregate, correlate, and sample your data using a tool such as Google BigQuery, Snowflake, Google Cloud Dataflow, or Amazon Kinesis Data Analytics. You can also query the data stream itself as it flows, using ksqlDB, a streaming SQL engine for Apache Kafka. Optionally, you can store this data in the cloud for future use, in object storage such as Amazon S3 or Google Cloud Storage, or in a cloud data warehouse such as Amazon Redshift.

4. Support use cases. Your real-time data is now ready to support whatever use case you have in mind. A real-time data analytics tool lets you conduct analysis, data science, and machine learning or AutoML without waiting for data to land in a database. These tools can also trigger alerts and events in other applications. Here are some specific use cases:

- Trigger events in other applications, for example in ad-buying software that purchases online advertising based on predefined rules, or in a content publishing system that makes personalized recommendations to users.

- Update data and calculations in time-sensitive apps such as stock trading, medical monitoring, navigation, and weather reporting.

- Produce interactive data dashboards and visualizations that deliver alerts and insights in real time.
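The four steps above can be sketched in plain Python to show how the pieces fit together. This is a minimal, in-memory illustration under stated assumptions, not how Kafka, Kinesis, or ksqlDB are actually used: the `Event` fields (`sensor_id`, `ts`, `value`), the rule that negative readings are invalid, the window length, and the alert threshold are all hypothetical, and any Python iterable stands in for the stream.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, Iterable


@dataclass
class Event:
    # Hypothetical event shape: source sensor, timestamp in seconds, reading.
    sensor_id: str
    ts: float
    value: float


class SlidingWindowAverage:
    """Average of the events whose timestamps fall in (now - window, now]."""

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        self.events: Deque[Event] = deque()
        self.total = 0.0

    def add(self, event: Event) -> float:
        self.events.append(event)
        self.total += event.value
        # Evict events that have slid out of the window (assumes in-order arrival).
        while self.events and self.events[0].ts <= event.ts - self.window_seconds:
            self.total -= self.events.popleft().value
        return self.total / len(self.events)


def run_pipeline(
    source: Iterable[Event],
    window_seconds: float,
    threshold: float,
    on_alert: Callable[[Event, float], None],
) -> None:
    windows: Dict[str, SlidingWindowAverage] = {}
    # Step 1: consume events one at a time as they arrive from the source.
    for event in source:
        # Steps 2 and 3: filter invalid readings (negative, by assumption),
        # then aggregate incrementally over a per-sensor sliding window.
        if event.value < 0:
            continue
        window = windows.setdefault(event.sensor_id, SlidingWindowAverage(window_seconds))
        avg = window.add(event)
        # Step 4: trigger a downstream action when the windowed average is too high.
        if avg > threshold:
            on_alert(event, avg)
```

For example, feeding readings of 10, 50, and 80 at t = 0, 30, and 90 seconds with a 60-second window and a threshold of 40 raises a single alert at t = 90, when the two earlier readings have left the window and the average is 80.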


Real-Time vs Batch

Today's data environments are too fast-moving for traditional batch processing alone. Batch processing works on preconfigured, historical data sets, which can support BI reporting but not real-time decisions and actions. However, you may choose to employ both a batch layer and a real-time layer to cover the full range of your data processing needs. Below is a side-by-side comparison:

| | Real-Time | Batch |
|---|---|---|
| Data Ingestion | Continuous sequence of individual events. | Batches of large data sets. |
| Processing | Processes each event as it arrives, or a recent time window. | Processes the entire dataset. |
| Analytics | Analysis of dynamic, time-sensitive data. | Analysis of static, historical data. |
| Query Scope | The most recent record or a recent time window. | The entire dataset. |
| Latency | Low: data is available in milliseconds or seconds. | High: data is available in minutes to hours. |
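To make the Processing and Latency rows concrete, here is a minimal sketch contrasting the two models on the same metric, a running mean. The batch version re-reads the whole stored dataset, while the streaming version keeps constant-size state and updates it as each event arrives. The names `batch_mean` and `StreamingMean` are illustrative, not from any particular library.

```python
from typing import Sequence


def batch_mean(dataset: Sequence[float]) -> float:
    # Batch: re-scan the entire stored dataset whenever a result is needed.
    return sum(dataset) / len(dataset)


class StreamingMean:
    """Real-time: keep constant-size state and update it as each event arrives."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> float:
        self.count += 1
        # Incremental update: new_mean = old_mean + (value - old_mean) / count.
        self.mean += (value - self.mean) / self.count
        return self.mean
```

For readings of 3, 5, and 10, the streaming version reports 3, 4, and then 6 after each event, ending at the same value, 6, that `batch_mean` computes over the full list. The difference is when the answer is available and how much data must be re-read to get it.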

And, while we're comparing concepts, here's another distinction worth making:

Real-Time Data vs Streaming Data

- Real-time data is defined by a maximum tolerated time to response, typically from under a millisecond to a few seconds. For example, a stock trading app requires instant data updates.

- Streaming data is defined by continuous data ingestion and places no specific constraint on time to response. For example, a sales dashboard for management could use streaming data and still be fine with near-real-time updates.

Challenges

There are a number of challenges in implementing real-time data in your organization. Most stem from the nature of streaming real-time data itself, which flows continuously at high velocity and volume and is often volatile, heterogeneous, and incomplete.

Latency. Real-time data quickly loses its relevance and value, so your real-time data processing usually can't afford more than a second of latency.
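One way to check whether a pipeline stays within a latency budget is to compare each event's creation time with the time it was processed and judge a high percentile rather than the average. A minimal sketch, assuming each event carries a creation timestamp in seconds and using the one-second budget mentioned above as the default; the helper names and the nearest-rank percentile method are illustrative choices.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, for pct in (0, 100]."""
    if not values:
        raise ValueError("no latency samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def within_budget(
    created_at: Sequence[float],
    processed_at: Sequence[float],
    budget_seconds: float = 1.0,
    pct: float = 99.0,
) -> bool:
    # End-to-end lag per event: when it was processed minus when it was created.
    lags = [done - start for start, done in zip(created_at, processed_at)]
    # Judge the tail (e.g. p99), since an average can hide a slow minority of events.
    return percentile(lags, pct) <= budget_seconds
```

Nearest-rank is only one of several percentile definitions, and comparing timestamps taken on different machines assumes their clocks are reasonably synchronized.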

Fault Tolerance. Real-time data flows continually, and your downstream applications often rely on this constant flow to perform. Your data pipeline must therefore keep running through failures without disrupting that flow, while managing data in a variety of formats from many sources.
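A common way to survive consumer crashes is to commit a checkpoint (the position of the next event to process) only after processing succeeds, and to make processing idempotent so that events replayed after a restart are not applied twice. In Kafka, the analogous concept is committing consumer offsets. The sketch below is hypothetical throughout: `CheckpointedConsumer`, the `(event_id, key, amount)` record layout, and the simulated crash are illustrative, and in-memory fields stand in for durable storage that a real system would update atomically.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class CheckpointedConsumer:
    # In-memory stand-ins for durable state (hypothetical; a real system persists these).
    checkpoint: int = 0  # offset of the next event to process
    seen_ids: Set[str] = field(default_factory=set)
    totals: Dict[str, float] = field(default_factory=dict)

    def process(self, log: List[Tuple[str, str, float]], crash_at: int = -1) -> None:
        """Process (event_id, key, amount) records, resuming from the checkpoint."""
        for offset in range(self.checkpoint, len(log)):
            event_id, key, amount = log[offset]
            # Idempotency: skip events already applied before a crash.
            if event_id not in self.seen_ids:
                self.totals[key] = self.totals.get(key, 0.0) + amount
                self.seen_ids.add(event_id)
            if offset == crash_at:
                # Simulate a crash after processing but before committing the checkpoint.
                raise RuntimeError("consumer crashed")
            # Commit the checkpoint only after the event was handled successfully.
            self.checkpoint = offset + 1
```

If the consumer crashes after applying `e2` but before committing its checkpoint, the restart replays `e2`, the `seen_ids` check skips it, and the totals stay correct. This pairing is often described as at-least-once delivery with idempotent processing.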

Scalability. Your data volume can spike quickly and vary greatly over time, so your system should be engineered to handle these fluctuations.
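Streaming platforms typically scale by splitting a stream into partitions spread across workers, and routing by key keeps all events for the same key on the same partition. A minimal sketch, using `zlib.crc32` as a stable hash; this is an illustrative choice, not the scheme any particular platform uses (Kafka's default partitioner, for example, hashes keys with murmur2), and `partition_for` and `assign` are hypothetical names.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def partition_for(key: str, num_partitions: int) -> int:
    # Stable hash: the same key maps to the same partition across processes
    # and restarts (unlike Python's built-in hash(), which is salted per process).
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign(
    events: Iterable[Tuple[str, float]], num_partitions: int
) -> Dict[int, List[Tuple[str, float]]]:
    partitions: Dict[int, List[Tuple[str, float]]] = defaultdict(list)
    for key, value in events:
        # Events for one key land in one partition, preserving their relative order.
        partitions[partition_for(key, num_partitions)].append((key, value))
    return dict(partitions)
```

With simple modulo hashing, changing the partition count remaps most keys, which is one reason partition counts are usually planned ahead of expected peak load.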

Event Ordering. Events can arrive out of order, for example because of network delays or retries. To support your downstream applications and resolve data discrepancies, you need to know the true sequence of events in the data stream.
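One common technique for restoring order is to buffer events briefly and release them in timestamp order once a watermark (the latest timestamp seen minus an allowed lateness) has passed them. A minimal sketch, assuming each event carries a numeric timestamp; `ReorderBuffer` and the allowed-lateness value are hypothetical, and events that arrive behind the watermark are set aside as late rather than reordered.

```python
import heapq
import itertools
from typing import List, Tuple


class ReorderBuffer:
    """Release buffered events in timestamp order once the watermark passes them."""

    def __init__(self, allowed_lateness: float) -> None:
        self.allowed_lateness = allowed_lateness
        self.heap: List[Tuple[float, int, str]] = []
        self.max_ts_seen = float("-inf")
        self.watermark = float("-inf")
        self.tiebreak = itertools.count()  # stable order for equal timestamps
        self.late: List[Tuple[float, str]] = []

    def push(self, ts: float, payload: str) -> List[Tuple[float, str]]:
        if ts < self.watermark:
            # Behind the watermark: treated as late by policy, not reordered.
            self.late.append((ts, payload))
            return []
        heapq.heappush(self.heap, (ts, next(self.tiebreak), payload))
        self.max_ts_seen = max(self.max_ts_seen, ts)
        self.watermark = self.max_ts_seen - self.allowed_lateness
        ready = []
        # Emit every buffered event the watermark has now passed, oldest first.
        while self.heap and self.heap[0][0] <= self.watermark:
            event_ts, _, event_payload = heapq.heappop(self.heap)
            ready.append((event_ts, event_payload))
        return ready

    def flush(self) -> List[Tuple[float, str]]:
        # At end of stream, emit whatever is still buffered, in timestamp order.
        ready = [(ts, p) for ts, _, p in sorted(self.heap)]
        self.heap.clear()
        return ready
```

The allowed lateness is a trade-off: a larger value tolerates more disorder but holds every event back longer, which adds directly to end-to-end latency.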

Cost. Legacy systems often can't support the demands of real-time data processing, so you'll need to invest in a new set of tools that can instantly analyze continually flowing data. However, the benefits of a real-time data architecture can deliver a positive ROI over the long term.
