What is ELT?

What it is, how it works and benefits. This guide provides definitions, use case examples and practical advice to help you understand ELT.


ELT stands for “Extract, Load, and Transform” and describes the set of data integration processes that extract data from one system, load it into a target repository, and then transform it for downstream uses such as business intelligence (BI) and big data analytics.

How ELT Works

ELT and cloud-based data warehouses and data lakes are the modern alternative to the traditional ETL pipeline and on-premises hardware approach to data integration. Together, ELT and cloud-based repositories are more scalable and more flexible, and they let you deliver data to analysts faster.

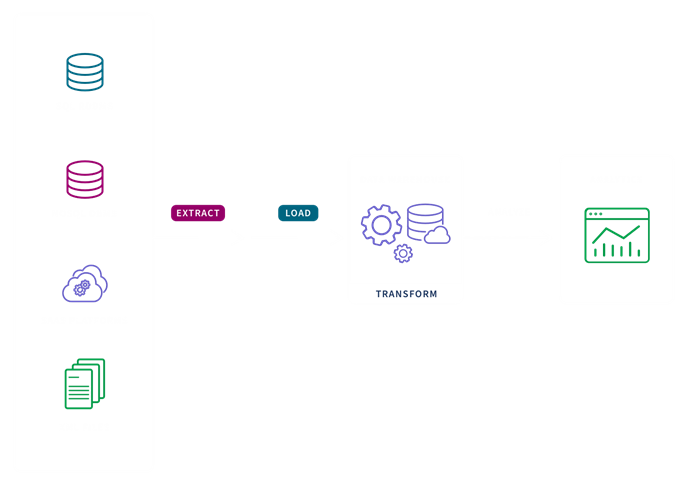

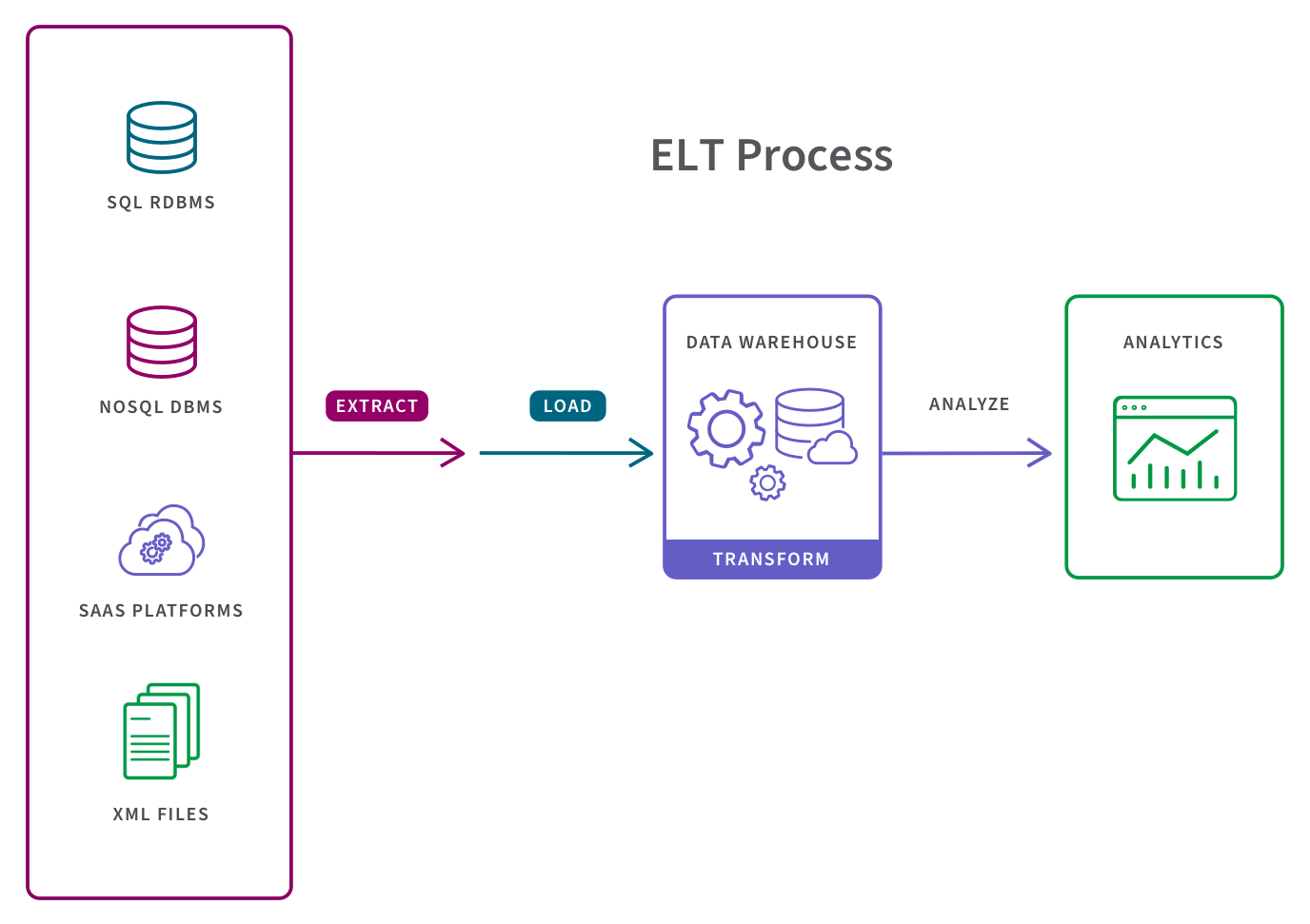

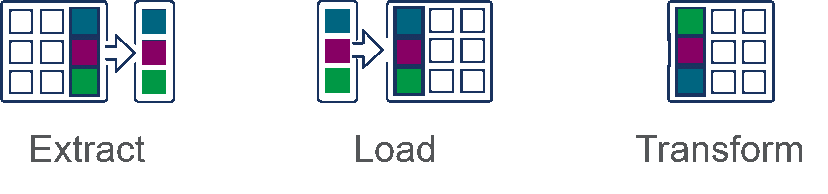

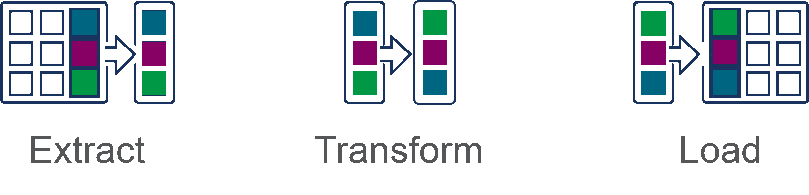

The ELT process is broken out as follows:

- Extract. A data extraction tool pulls data from a source or sources such as SQL or NoSQL databases, cloud platforms or XML files. This extracted data is often stored temporarily in a staging area in a database to confirm data integrity and to apply any necessary business rules.

- Load. The second step involves placing the data into the target system, typically a cloud data warehouse, where it is ready to be analyzed by BI tools or data analytics tools.

- Transform. Once loaded, the data is transformed inside the target system: the structure or format of a data set is converted to suit its downstream use. Examples of transformations include data mapping, replacing codes with values and applying concatenations or calculations.
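
The three steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the in-memory SQLite database stands in for a cloud data warehouse, and the source rows, the table names (`raw_orders`, `orders`) and the function names are all hypothetical.

```python
import sqlite3

# Hypothetical source records; in practice these would come from a
# database, a cloud platform or a file.
SOURCE_ROWS = [
    {"order_id": 1, "country_code": "US", "first_name": "Ada",
     "last_name": "Lovelace", "qty": 2, "unit_price": 9.5},
    {"order_id": 2, "country_code": "DE", "first_name": "Carl",
     "last_name": "Gauss", "qty": 1, "unit_price": 20.0},
]


def extract():
    """Extract: pull records from the source without changing them."""
    return list(SOURCE_ROWS)


def load(conn, rows):
    """Load: write the raw records into the target system as-is."""
    conn.execute(
        "CREATE TABLE raw_orders (order_id INTEGER, country_code TEXT, "
        "first_name TEXT, last_name TEXT, qty INTEGER, unit_price REAL)"
    )
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :country_code, "
        ":first_name, :last_name, :qty, :unit_price)",
        rows,
    )


def transform(conn):
    """Transform: run SQL inside the target, after loading."""
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT order_id,
               -- replace codes with values
               CASE country_code
                   WHEN 'US' THEN 'United States'
                   WHEN 'DE' THEN 'Germany'
                   ELSE country_code
               END AS country,
               -- concatenation
               first_name || ' ' || last_name AS customer,
               -- calculation
               qty * unit_price AS total
        FROM raw_orders
        """
    )


def run_elt():
    conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse
    load(conn, extract())
    transform(conn)
    return conn.execute(
        "SELECT order_id, country, customer, total FROM orders ORDER BY order_id"
    ).fetchall()
```

Note that the raw table is kept after the transformation runs, so further transformations can be defined later without extracting the data again.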

ELT vs ETL

The main difference between the two processes is how, when and where data transformation occurs. The ELT process is most appropriate for larger, nonrelational, and unstructured data sets and when timeliness is important. The ETL process is more appropriate for small data sets that require complex transformations.

Today, your business has to process many types and massive volumes of data. This can be a significant challenge for the traditional ETL pipeline and on-premises data warehouses.

Extract > Load > Transform (ELT)

In the ELT process, data transformation is performed on an as-needed basis within the target system. Because the data is loaded without an upfront transformation step, it reaches the target sooner. However, if the cloud solution does not have sufficient processing power, transformation can slow down querying and analysis. This is why ELT is more appropriate for larger structured and unstructured data sets and when timeliness is important.

Extract > Transform > Load (ETL)

In the ETL process, transformation is performed in a staging area outside of the data warehouse, before the data is loaded into it. The entire data set must be transformed before loading, so transforming large data sets can take a lot of time up front. The benefit is that analysis can take place immediately once the data is loaded. This is why ETL is appropriate for small data sets that require complex transformations.

Building and maintaining a data warehouse can require hundreds or thousands of ETL tool programs. As a result, building data warehouses with ETL tools can be time-consuming, cumbersome, and error-prone — introducing delays and unnecessary risk into BI projects that require the most up-to-date data, and the agility to react quickly to changing business demands.
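
The difference in ordering between the two approaches can be made concrete with a small sketch. It assumes an in-memory SQLite database as a stand-in for the target system, and the rows, table names and function names are hypothetical. In the ETL path the transformation runs in Python before anything is loaded; in the ELT path the raw rows are loaded first and a SQL view applies the transformation on demand inside the target.

```python
import sqlite3

# Hypothetical source rows: (sku, quantity stored as text).
RAW = [("a", "3"), ("b", "4")]


def etl():
    """ETL: transform in a staging step outside the target, then load."""
    staged = [(sku.upper(), int(qty)) for sku, qty in RAW]
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (sku TEXT, qty INTEGER)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", staged)
    return conn


def elt():
    """ELT: load the raw rows first, then transform inside the target."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_sales (sku TEXT, qty TEXT)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?)", RAW)
    # The view is evaluated at query time, so the transformation cost is
    # paid when the data is analyzed rather than before it is loaded.
    conn.execute(
        "CREATE VIEW sales AS "
        "SELECT upper(sku) AS sku, CAST(qty AS INTEGER) AS qty FROM raw_sales"
    )
    return conn


def query_sales(conn):
    return conn.execute("SELECT sku, qty FROM sales ORDER BY sku").fetchall()


def object_names(conn):
    """List the tables and views that exist in the target."""
    return sorted(row[0] for row in conn.execute("SELECT name FROM sqlite_master"))
```

Both paths answer the same query with the same result, but only the ELT target still holds the raw data (`raw_sales`), and only the ELT path does its transformation work at query time.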


Key Benefits

ELT makes the process of managing your big data and cloud data warehouse more efficient and effective. This lets you focus on analyzing your data to uncover actionable insights. Specifically, the key benefits are:

- Flexibility. The ELT process lets you replicate all of your raw data and then transform it on demand as needed for data analytics, BI or other systems.

- Scalability. Modern cloud data warehouses, data lakes and data lakehouses allow you to scale to leverage all your raw data.

- Speed. The ELT process typically involves loading all your data into a cloud repository. These cloud services allow you to quickly transform and process massive data sets.

- Lower cost. Unlike ETL pipelines, which require an investment in on-premises hardware, cloud-based ELT usually offers you a lower total cost of ownership because it is priced on a pay-as-you-go service model.

ELT Tools

New ELT tools are known as data warehouse automation and transformation platforms, which automate the repetitive, labor-intensive tasks associated with integration and data warehousing.

Data warehouse automation eliminates error-prone manual coding and automates the entire data warehousing lifecycle from design and development to impact analysis and change management. These automation tools automatically generate the commands, data warehouse structures, and documentation necessary for designing, building, and maintaining your data warehouse program, helping you save time, reduce cost, and reduce project risk.
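
As a rough illustration of what "automatically generating commands and structures" can mean, the sketch below derives `CREATE TABLE` statements and a one-line description from a small metadata model. It is a toy example under stated assumptions, not how any particular automation product works: the `MODEL` dictionary, the table names and the function names are hypothetical, and SQLite stands in for the warehouse.

```python
import sqlite3

# Hypothetical model metadata: table name -> {column name: SQL type}.
MODEL = {
    "dim_customer": {"customer_id": "INTEGER", "name": "TEXT"},
    "fact_orders": {"order_id": "INTEGER", "customer_id": "INTEGER", "total": "REAL"},
}


def generate_ddl(table, columns):
    """Generate a CREATE TABLE command from the column metadata."""
    cols = ",\n".join(f"    {name} {sql_type}" for name, sql_type in columns.items())
    return f"CREATE TABLE {table} (\n{cols}\n);"


def generate_docs(table, columns):
    """Generate a one-line description of the table from the same metadata."""
    return f"{table}: " + ", ".join(columns)


def build(conn, model):
    """Create every table in the model by running the generated commands."""
    for table, columns in model.items():
        conn.execute(generate_ddl(table, columns))
```

Because the commands and the documentation come from the same model, a change to the model is reflected in both without hand-editing either one.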

Seamless integration with a real-time event capture and data integration solution enables real-time ELT by combining real-time source data integration with automated generation. It also supports a wide ecosystem of heterogeneous data sources including relational, legacy, and NoSQL data stores.

DataOps for Analytics

Modern data integration delivers real-time, analytics-ready and actionable data to any analytics environment, from Qlik to Tableau, Power BI and beyond.

- Real-Time Data Streaming (CDC). Extend enterprise data into live streams to enable modern analytics and microservices with a simple, real-time and universal solution.

- Agile Data Warehouse Automation. Quickly design, build, deploy and manage purpose-built cloud data warehouses without manual coding.

- Managed Data Lake Creation. Automate complex ingestion and transformation processes to provide continuously updated and analytics-ready data lakes.