Data Fabric

What it is, why you need it, and best practices. This guide provides definitions and practical advice to help you understand and establish a data fabric architecture.

What Is Data Fabric?

Data fabric refers to a machine-enabled data integration architecture that uses metadata assets to unify, integrate, and govern disparate data environments. By standardizing, connecting, and automating data management practices and processes, data fabrics improve data security and accessibility and provide end-to-end integration of data pipelines across on-premises, cloud, hybrid multicloud, and edge device platforms.

Why Is It Important?

You’re probably surrounded by large and complex datasets from many different and unconnected sources: CRM, finance, marketing automation, operations, IoT/product, even real-time streaming data. Plus, your organization may be spread out geographically, have complicated use cases, or face complex data issues such as data stored across cloud, hybrid multicloud, on-premises, and edge environments.

A data fabric architecture will help you bring together data from these different sources and repositories and transform and process it using machine learning to uncover patterns. This gives you a holistic picture of your business and lets you explore and analyze trusted, governed data. Ultimately, this helps you uncover actionable insights that improve your business.

Here are the key benefits of adopting this concept for your organization:

- Break down data silos and achieve consistency across integrated environments through the use of metadata management, semantic knowledge graphs, and machine learning.

- Create a holistic view of your business to give business users, analysts, and data scientists the power to find relationships across systems.

- Maximize the power of hybrid cloud and reduce development and management time for integration design, deployment, and maintenance by simplifying the infrastructure configuration.

- Make it easier for business users to explore and analyze data without relying on IT.

- Make all data delivery approaches available through support for ETL batches, data virtualization, change data capture, streaming, and APIs.

- Make data management more efficient through the use of automation for mundane tasks such as aligning schema to new data sources and profiling datasets.

Data Fabric Architecture

A data fabric facilitates a distributed data environment where data can be ingested, transformed, managed, stored, and accessed across a wide range of repositories and use cases, such as BI tools or operational applications. It achieves this by running continuous analytics over existing and inferred metadata assets to create a web-like layer that integrates data processes and the many sources, types, and locations of data. It also employs modern processes such as active metadata management, semantic knowledge graphs, and embedded machine learning and AutoML.

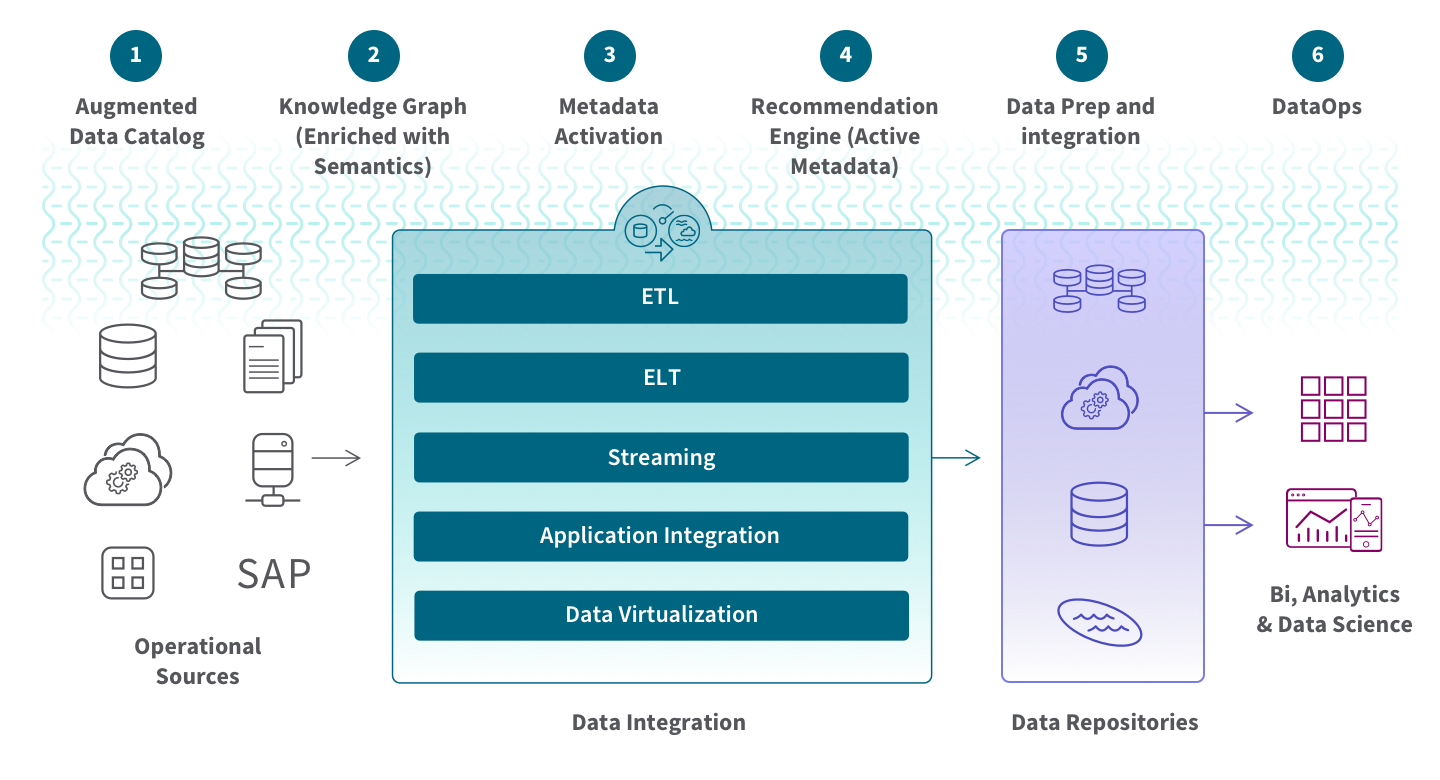

Digging in a bit deeper, let’s first discuss six factors that distinguish a data fabric from a standard data integration ecosystem:

- Augmented data catalog. Your data catalog will include and analyze all types of metadata (structural, descriptive, and administrative) in order to provide context to your information.

- Knowledge graph. To help you and the AI/ML algorithms interpret the meaning of your data, you will build and manage a knowledge graph that formally illustrates the relationships between entities in your data (concepts, objects, events, etc.). The graph should be enriched with unified data semantics, which describe the meaning of the data components themselves.

- Metadata activation. You will switch from manual (passive) metadata to automatic (active) metadata. Active metadata management leverages machine learning to allow you to create and process metadata at massive scale.

- Recommendation engine. Based on your active metadata, AI/ML algorithms will continuously analyze, learn, and make recommendations and predictions about your data integration and management ecosystem.

- Data prep & ingestion. All common data preparation and delivery approaches will be supported, including the five key patterns of data integration: ETL, ELT, data streaming, application integration, and data virtualization.

- DataOps. Bring your DevOps team together with your data engineers and data scientists to ensure that your fabric supports the needs of both IT and business users.
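
To make the catalog and knowledge graph ideas more concrete, here is a minimal sketch in Python. It assumes a toy catalog held in a dictionary and treats a shared column name as a relationship between datasets. The dataset names, the `has_column` relation, and both helper functions are hypothetical and chosen only for illustration; a real fabric would rely on a dedicated catalog and graph store and on much richer semantics.

```python
from collections import defaultdict

# Hypothetical catalog: dataset name -> column names (structural metadata).
catalog = {
    "crm.customers": ["customer_id", "email", "region"],
    "finance.invoices": ["invoice_id", "customer_id", "amount"],
    "iot.device_events": ["device_id", "customer_id", "event_time"],
}


def build_graph(catalog):
    """Turn the catalog into (dataset, "has_column", column) triples."""
    triples = set()
    for dataset, columns in catalog.items():
        for column in columns:
            triples.add((dataset, "has_column", column))
    return triples


def related_datasets(triples, dataset):
    """Return the datasets that share a column with `dataset`, plus the shared columns."""
    by_column = defaultdict(set)
    for subject, _, column in triples:
        by_column[column].add(subject)

    related = defaultdict(set)
    for column, datasets in by_column.items():
        if dataset in datasets:
            for other in datasets - {dataset}:
                related[other].add(column)
    return {name: sorted(columns) for name, columns in related.items()}


graph = build_graph(catalog)
links = related_datasets(graph, "crm.customers")
```

In this sketch, `links` maps both `finance.invoices` and `iot.device_events` to the shared `customer_id` column, which is the kind of cross-system relationship a knowledge graph is meant to surface.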

As the diagram above also shows, as data is provisioned from sources to consumers, a data fabric brings together data from a wide variety of source systems across your organization, including operational data sources and data repositories such as your data warehouse, data lakes, and data marts. This is one reason why a data fabric is well suited to a data mesh design.

The data fabric supports the scale of big data for both batch processes and real-time streaming data, and it provides consistent capabilities across your cloud, hybrid multicloud, on-premises, and edge environments. It creates fluidity across data environments and provides you with a complete, accurate, and up-to-date dataset for analytics, other applications, and business processes. It also reduces time and expense by providing pre-packaged components and connectors to stitch everything together, so you don’t have to manually code each connection.

Your data fabric architecture will depend on your specific data needs and situation. However, according to the research firm Forrester, there are six common layers for modern enterprise data fabrics:

- Data management provides governance and security

- Data ingestion identifies connections between structured and unstructured data

- Data processing extracts only relevant data

- Data orchestration cleans, transforms, and integrates data

- Data discovery identifies new ways to integrate different data sources

- Data access enables users to explore data via analytic and BI tools based on access permissions
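
The way these layers hand data to one another can be sketched in a few lines of Python. The example below is a simplified illustration covering only four of the six layers (management, processing, orchestration, and access); ingestion and discovery are left out. The sample rows, field names, roles, and function names are all assumptions made up for this sketch and do not come from Forrester or any product.

```python
# Hypothetical raw rows arriving from two source systems.
RAW = [
    {"source": "crm", "customer_id": " 42 ", "email": "A@Example.com", "debug": "x"},
    {"source": "finance", "customer_id": "42", "amount": "19.90", "debug": "y"},
]

# Data management: governance rules expressed as plain data.
RELEVANT_FIELDS = {"source", "customer_id", "email", "amount"}
PERMISSIONS = {"analyst": {"customer_id", "amount"}, "admin": RELEVANT_FIELDS}


def process(rows):
    """Data processing: keep only the relevant fields of each row."""
    return [{k: v for k, v in row.items() if k in RELEVANT_FIELDS} for row in rows]


def orchestrate(rows):
    """Data orchestration: clean values and merge rows into one record per customer."""
    merged = {}
    for row in rows:
        key = row["customer_id"].strip()
        record = merged.setdefault(key, {"customer_id": key})
        for name, value in row.items():
            if name in ("customer_id", "source"):
                continue
            record[name] = value.strip().lower() if name == "email" else value
    for record in merged.values():
        if "amount" in record:
            record["amount"] = float(record["amount"])
    return merged


def access(merged, role):
    """Data access: expose only the fields the role is permitted to see."""
    allowed = PERMISSIONS[role]
    return [{k: v for k, v in rec.items() if k in allowed} for rec in merged.values()]


analyst_view = access(orchestrate(process(RAW)), "analyst")
admin_view = access(orchestrate(process(RAW)), "admin")
```

Here the analyst sees only the customer ID and amount, while the admin role also sees the cleaned email address. Keeping the permissions as data rather than code is one simple way to let the management layer change policy without touching the pipeline.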

Data Fabric Implementation

There is not currently a single, stand-alone tool or platform you can use to fully establish a data fabric architecture. You’ll have to employ a mix of solutions, such as using a top data management tool for most of your needs and then finishing out your architecture with other tools and/or custom-coded solutions.

For example, implementing a data fabric architecture with an integration platform as a service (iPaaS) requires a comprehensive approach that emphasizes creating a unified and standardized layer of data services while also prioritizing data quality, governance, and self-service access.

According to research firm Gartner, there are four pillars to consider when implementing:

- Collect and analyze all types of metadata

- Convert passive metadata to active metadata

- Create and curate knowledge graphs that enrich data with semantics

- Ensure a robust data integration foundation
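
As a rough illustration of the second pillar, the Python sketch below contrasts passive metadata (a static, hand-written description) with metadata derived automatically from usage. It assumes a hypothetical query log and uses simple co-usage counting in place of the machine learning a real fabric would apply. All names, the log format, and the recommendation rule are assumptions for this example.

```python
from collections import Counter
from itertools import combinations

# Passive metadata: hand-written and static until someone edits it.
passive = {"sales.orders": {"owner": "sales-ops", "description": "Order headers"}}

# Hypothetical query log: (user, datasets touched by one query).
query_log = [
    ("ana", ("sales.orders", "crm.customers")),
    ("ben", ("sales.orders", "crm.customers")),
    ("ana", ("sales.orders", "finance.invoices")),
]


def co_usage_counts(query_log):
    """Count how often each pair of datasets appears in the same query."""
    counts = Counter()
    for _, datasets in query_log:
        for left, right in combinations(sorted(set(datasets)), 2):
            counts[(left, right)] += 1
    return counts


def recommend_partner(dataset, counts):
    """Return the dataset most often queried together with `dataset`, or None."""
    candidates = Counter()
    for (left, right), n in counts.items():
        if dataset == left:
            candidates[right] += n
        elif dataset == right:
            candidates[left] += n
    return candidates.most_common(1)[0][0] if candidates else None


counts = co_usage_counts(query_log)

# Active metadata: the passive entry enriched with a usage-derived suggestion.
active = {
    name: {**entry, "suggested_join": recommend_partner(name, counts)}
    for name, entry in passive.items()
}
```

In this sketch the `sales.orders` entry gains a `suggested_join` of `crm.customers`, because that pair appears together most often in the log. The point is only that the suggestion updates itself as the log grows, which is the difference between active and passive metadata.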

In addition to these pillars, you’ll need to have in place the typical elements of a robust data integration solution. This includes the mechanisms for collecting, managing, storing, and accessing your data. You’ll also need a proper data governance framework that includes metadata management, data lineage, and data integrity best practices.

DataOps for Analytics

Modern data integration delivers real-time, analytics-ready and actionable data to any analytics environment, from Qlik to Tableau, Power BI and beyond.

- Real-Time Data Streaming (CDC). Extend enterprise data into live streams to enable modern analytics and microservices with a simple, real-time and universal solution.

- Agile Data Warehouse Automation. Quickly design, build, deploy and manage purpose-built cloud data warehouses without manual coding.

- Managed Data Lake Creation. Automate complex ingestion and transformation processes to provide continuously updated and analytics-ready data lakes.