Data Exploration

What it is, why it matters, and best practices. This guide provides definitions and practical advice to help you understand and practice modern data exploration.

What is Data Exploration?

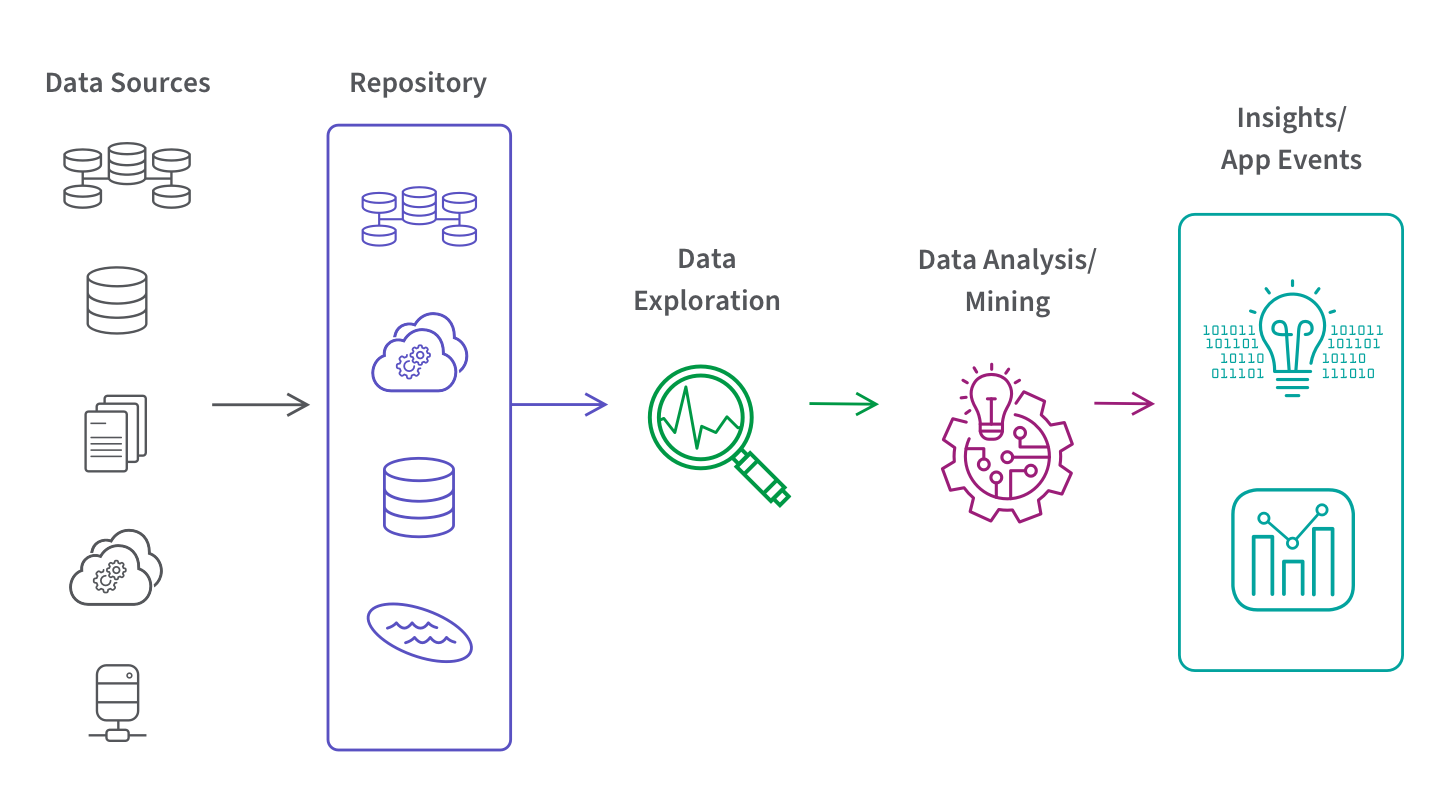

Data exploration is the process of reviewing a raw dataset to uncover its characteristics and initial patterns before further analysis. It is the first step of data analysis: data visualization tools and statistical techniques help you understand the size and quality of your data, detect outliers or anomalies, and identify potential relationships among data elements, files, and tables.
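A first look at size and quality can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a prescribed method; the sample records, the column names, and the `profile` helper are all hypothetical.

```python
from statistics import mean, median

# Hypothetical sample records; None marks a missing value.
rows = [
    {"region": "north", "units": 12, "price": 9.5},
    {"region": "south", "units": 15, "price": None},
    {"region": "north", "units": None, "price": 10.0},
    {"region": "east", "units": 11, "price": 9.0},
]


def profile(records):
    """Return the row count plus per-column missing counts and numeric summaries."""
    columns = sorted({key for record in records for key in record})
    report = {"row_count": len(records), "columns": {}}
    for column in columns:
        values = [record.get(column) for record in records]
        present = [v for v in values if v is not None]
        info = {"missing": len(values) - len(present), "distinct": len(set(present))}
        numeric = [
            v for v in present
            if isinstance(v, (int, float)) and not isinstance(v, bool)
        ]
        # Summarize only columns whose present values are all numeric.
        if present and len(numeric) == len(present):
            info.update(min=min(numeric), max=max(numeric),
                        mean=mean(numeric), median=median(numeric))
        report["columns"][column] = info
    return report


report = profile(rows)
print("rows:", report["row_count"])
for name, info in report["columns"].items():
    print(name, info)
```

The report answers the opening questions of any exploration: how many rows there are, which columns have gaps, and what range each numeric column covers.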

Why is Data Exploration Important?

It’s extremely difficult (if not impossible) to manually review thousands of rows of data and get a comprehensive view of your dataset, especially when the dataset is large and unstructured and the data comes from multiple sources.

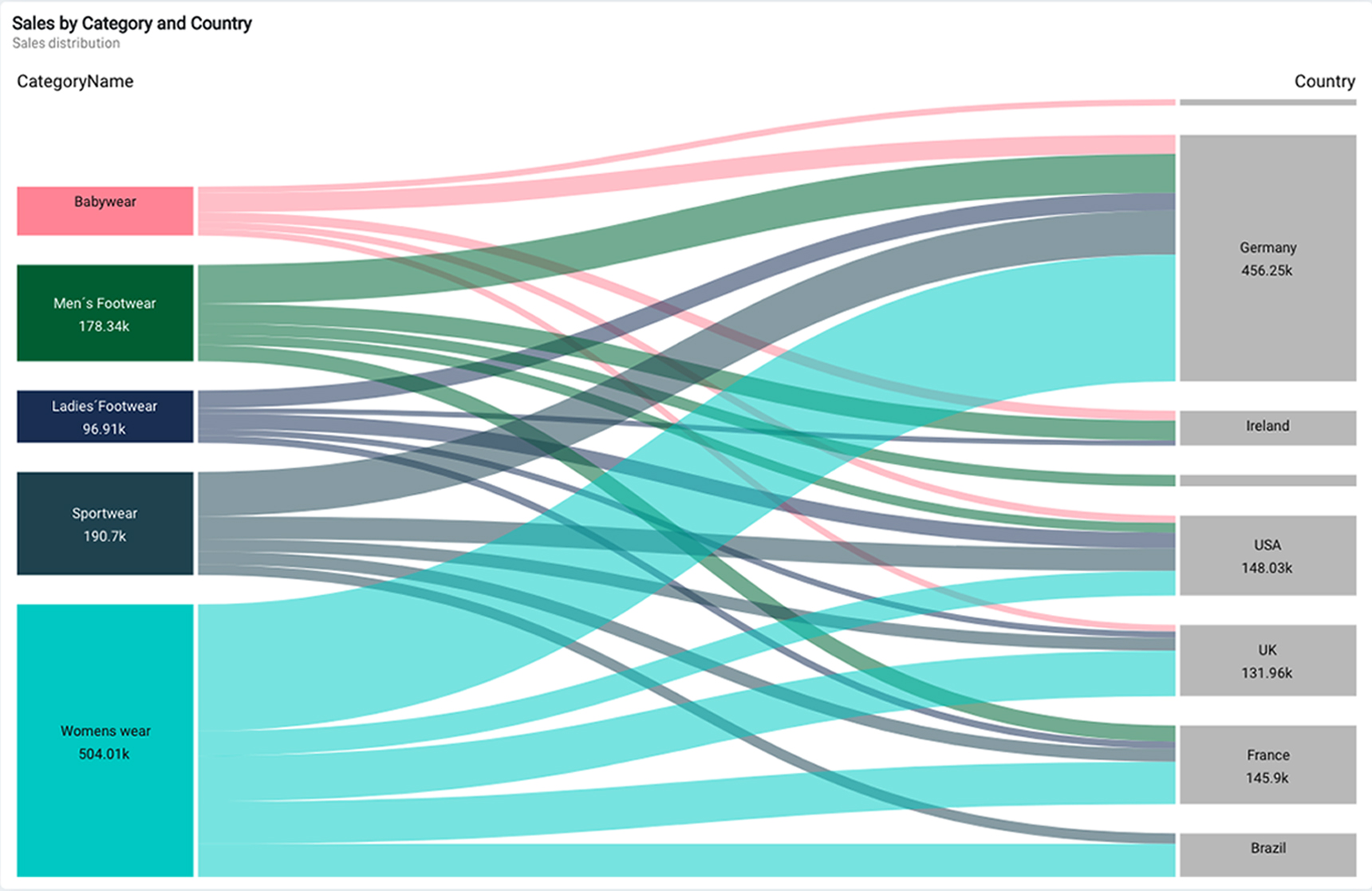

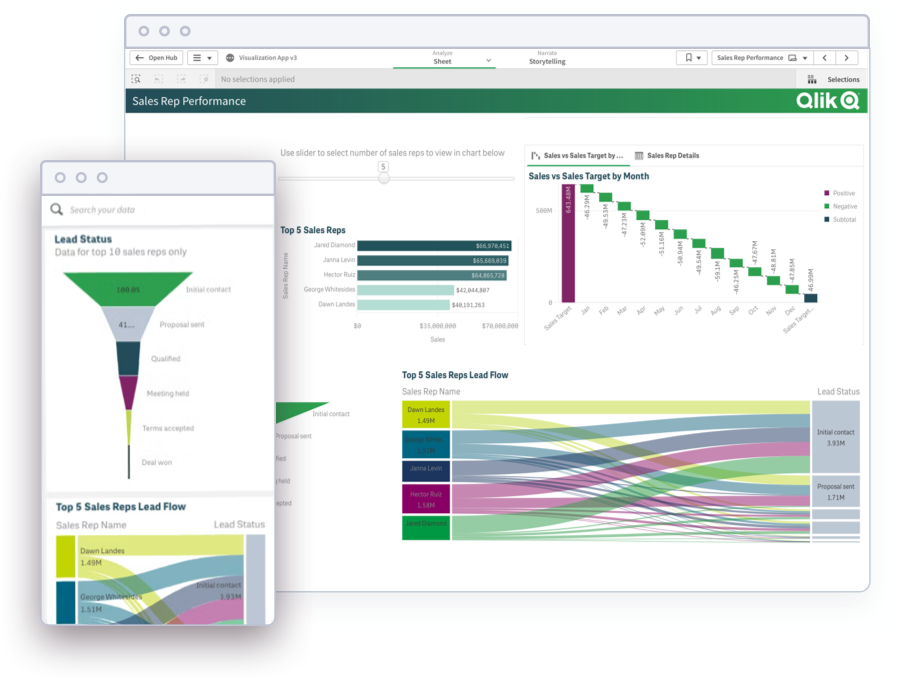

Data exploration tools and processes provide a visual representation of your dataset in charts, graphs, maps, or other visual formats. Visual formats draw on the innate human ability to recognize patterns, including patterns that automated analytics tools may not surface. You can then make better decisions about data cleansing, defining the metadata, and choosing where to focus deeper analysis.

The best tools offer a user-friendly interface and AI assistance that make it easy for anyone in your organization to explore data in a governed way, improving data literacy and encouraging self-service analytics.

Data Exploration vs Data Mining

The terms data exploration and data mining are sometimes used interchangeably. While they’re both methods for understanding large datasets, here are three key differences:

1) Stage in the Analytics/Data Science Process.

Typically, you explore data before data mining. This is because you need to first get a comprehensive view of your dataset and identify potential relationships between variables before you dive into deeper analysis, AutoML, or building custom data models and algorithms.

2) Level of Expertise Required.

Modern data visualization tools enable citizen data scientists to explore data. Citizen data scientists are people who rely on insights from data but have no formal training in analytics or data science. Data mining, on the other hand, is typically performed by data scientists and analysts who build machine learning models that apply algorithms to find patterns in your data.

3) Nature of the Data.

Data exploration typically works with unstructured or semi-structured data, whereas data mining is usually performed on structured data.

Data Exploration Tools

Manual Analysis.

Historically, to explore big data, you’d need to write statistical analysis scripts in R, create machine learning algorithms in Python, and/or manually filter data in a spreadsheet. If you have the data science expertise and the time to do this, then manual analysis is still an option.
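As a rough idea of what such a manual script looks like, the sketch below filters and summarizes a handful of records in plain Python. The `orders` data and the helper names `filter_rows` and `summarize_by` are hypothetical and stand in for rows you might otherwise filter by hand in a spreadsheet.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical order records standing in for rows exported from a spreadsheet.
orders = [
    {"region": "north", "amount": 120.0},
    {"region": "south", "amount": 80.0},
    {"region": "north", "amount": 200.0},
    {"region": "south", "amount": 40.0},
    {"region": "east", "amount": 310.0},
]


def filter_rows(records, min_amount):
    """Keep records whose amount is at least min_amount (a manual filter)."""
    return [r for r in records if r["amount"] >= min_amount]


def summarize_by(records, key, value):
    """Group records by `key` and report the count and mean of `value` per group."""
    groups = defaultdict(list)
    for r in records:
        groups[r[key]].append(r[value])
    return {g: {"count": len(v), "mean": mean(v)} for g, v in groups.items()}


large_orders = filter_rows(orders, min_amount=100.0)
summary = summarize_by(orders, key="region", value="amount")

print("orders of at least 100:", len(large_orders))
for region, stats in summary.items():
    print(region, stats)
```

Every new question means another function or another edit to the script, which is the time cost the paragraph above refers to.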

Automated.

Today’s visualization software and BI tools make it easy for you to integrate disparate data sources and apply advanced techniques such as regression; univariate, bivariate, and multivariate analysis; and principal component analysis. These tools also allow you to monitor your data sources, collaborate with others, and share findings in interactive data dashboards. The best tools even integrate AutoML capabilities that simplify building custom machine learning models.

You can also consider using open source tools such as Pentaho, R, KNIME, NodeXL, RapidMiner, and OpenRefine. Be aware that open source software can be tricky to set up and use, and it can expose you to security risks.

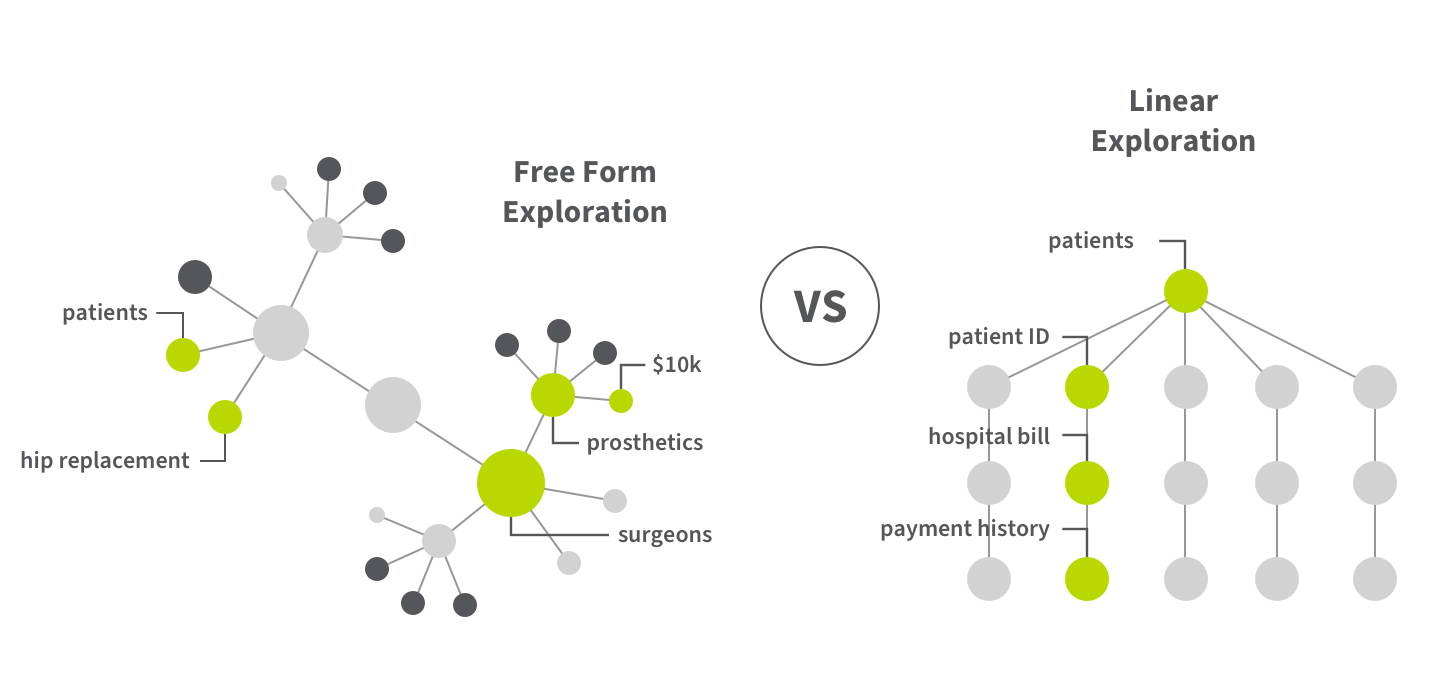

Associative vs Linear Exploration.

Whether you’re conducting data science or data analytics, your tool should allow you to freely explore all your data, in any direction, directly from within the visualizations you produce. Look for an associative data engine that lets you uncover connections you might not have thought to query for, or that you can’t find at all with a query-based BI tool. Tools with a query-based architecture limit you to simple filtering within subsets of your data.

Machine Learning and AutoML

Machine learning and AutoML (automated machine learning) can be applied to data exploration, and they are especially appropriate for big data. To ensure the accuracy of your ML model, here are six critical steps you should take in advance:

- Clearly define the question you’re trying to answer. This will inform your data requirements.

- Gather and prep the appropriate data. Data prep includes ensuring your dataset is correctly labeled and formatted, avoiding data leakage and training-serving skew, and cleaning up any incomplete, missing, or inconsistent data.

- Identify and define all variables in your dataset.

- Perform univariate analysis. You can use a histogram or box plot for a single numeric variable and a bar chart for variables that can be grouped by category.

- Conduct bivariate analysis. You can use a visualization, such as a scatter plot, to find relationships between pairs of variables.

- Account for outliers and missing values.
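The last three steps in the list above can be sketched without a charting tool. The example below is an illustration under stated assumptions rather than a required procedure: the data is made up, the 1.5 × IQR rule is one common rule of thumb for flagging outliers, Pearson correlation is one of several ways to measure a relationship between two variables, and all function names are hypothetical.

```python
from math import sqrt
from statistics import mean, quantiles

# Hypothetical paired observations. One spend value is deliberately extreme
# and one is missing (None).
ad_spend_raw = [10.0, 12.0, 11.0, 13.0, 12.0, 14.0, 13.0, 60.0, None]
revenue_raw = [20.0, 25.0, 22.0, 27.0, 24.0, 29.0, 26.0, 30.0, 28.0]


def complete_pairs(xs, ys):
    """Missing values: drop pairs where either value is None."""
    return [(x, y) for x, y in zip(xs, ys) if x is not None and y is not None]


def five_number_summary(values):
    """Univariate view: min, quartiles, and max (the numbers a box plot draws)."""
    q1, q2, q3 = quantiles(values, n=4, method="inclusive")
    return {"min": min(values), "q1": q1, "median": q2, "q3": q3, "max": max(values)}


def iqr_outliers(values, k=1.5):
    """Outliers: flag values outside [q1 - k*IQR, q3 + k*IQR]."""
    s = five_number_summary(values)
    iqr = s["q3"] - s["q1"]
    low, high = s["q1"] - k * iqr, s["q3"] + k * iqr
    return [v for v in values if v < low or v > high]


def pearson(xs, ys):
    """Bivariate view: Pearson correlation between two equal-length series."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


pairs = complete_pairs(ad_spend_raw, revenue_raw)
spend = [x for x, _ in pairs]
rev = [y for _, y in pairs]

summary = five_number_summary(spend)
outliers = iqr_outliers(spend)

# Compare the correlation with and without the flagged spend values.
clean = [(x, y) for x, y in pairs if x not in outliers]
r_all = pearson(spend, rev)
r_clean = pearson([x for x, _ in clean], [y for _, y in clean])

print("summary:", summary)
print("outliers:", outliers)
print("correlation with outliers:", round(r_all, 3))
print("correlation without outliers:", round(r_clean, 3))
```

Comparing the two correlation values shows why outliers are worth handling before modeling: a single extreme point can noticeably weaken an otherwise strong relationship.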

AutoML refers to the tools and processes that make it easy for you to build, train, deploy, and serve custom machine learning models. AutoML gives both ML experts and citizen data scientists a simple, code-free way to generate models, make predictions, and test business scenarios. This lets you apply machine learning across your organization.

Data scientists use AutoML to avoid a cumbersome trial-and-error workflow and instead put their energy toward customizing models and notebooks.
