Data Quality

What it is, why you need it, and best practices. This guide provides definitions and practical advice to help you understand and maintain data quality.

What is Data Quality?

Data quality is the extent to which a dataset meets established standards for accuracy, consistency, reliability, completeness, and timeliness. High-quality data is trustworthy and suitable for analysis, decision-making, reporting, or other data-driven activities.

Data quality management involves ongoing processes to identify and rectify errors, inconsistencies, and inaccuracies. It should be a key element of your data governance framework and your broader data management system.

Why It’s Important

Data quality is essential because it underpins informed decision-making, reliable reporting, and accurate analysis. Bad data can lead to errors, misinterpretations, and misguided decisions, potentially causing financial losses and reputational damage. Reliable data enables you to have confidence in your business intelligence insights, leading to better strategic choices, improved operational efficiency, and enhanced customer experiences.

Data Quality Dimensions

Data quality is assessed along multiple dimensions, and the ones that matter can vary with your data sources. The dimensions categorize data quality metrics, which you use to perform an assessment.

The six dimensions most organizations track:

- Accuracy ensures that values are correct when checked against your single "source of truth." Designating a primary data source and cross-referencing other sources against it improves accuracy.

- Consistency compares records for the same data across different datasets, confirming that trends and behaviors agree across sources so insights are reliable.

- Completeness reflects the proportion of data that is usable. A high rate of missing values can make a sample unrepresentative, leading to biased analysis.

- Timeliness addresses whether data is ready within the expected timeframe. For example, an order number should be generated immediately when an order is placed.

- Uniqueness measures the volume of duplicate data. For instance, each customer record should have a distinct customer ID.

- Validity gauges how well data conforms to business rules, including metadata rules such as valid data types, ranges, and patterns.
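Several of these dimensions can be expressed as simple ratios. The following is a minimal sketch, not a standard implementation: the sample records, the field names, the email pattern, and the seven-day freshness window are all assumptions made for illustration.

```python
import re
from datetime import datetime, timedelta

# Hypothetical customer records; field names are illustrative only.
records = [
    {"customer_id": 1, "email": "ann@example.com", "updated_at": datetime(2024, 1, 10)},
    {"customer_id": 2, "email": None, "updated_at": datetime(2024, 1, 9)},
    {"customer_id": 2, "email": "bob@example", "updated_at": datetime(2023, 6, 1)},
    {"customer_id": 4, "email": "cy@example.com", "updated_at": datetime(2024, 1, 10)},
]

# Deliberately loose pattern (assumption): something@something.something
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def completeness(rows, field):
    """Share of rows where the field is present and non-empty."""
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)

def uniqueness(rows, field):
    """Share of rows that carry a distinct value for the field."""
    values = [r[field] for r in rows]
    return len(set(values)) / len(values)

def validity(rows, field, pattern):
    """Share of non-missing values that match the pattern."""
    present = [r[field] for r in rows if r.get(field)]
    return sum(1 for v in present if pattern.match(v)) / len(present)

def timeliness(rows, field, as_of, max_age):
    """Share of rows updated within max_age of the as_of time."""
    fresh = sum(1 for r in rows if as_of - r[field] <= max_age)
    return fresh / len(rows)

email_completeness = completeness(records, "email")
id_uniqueness = uniqueness(records, "customer_id")
email_validity = validity(records, "email", EMAIL_PATTERN)
freshness = timeliness(records, "updated_at", datetime(2024, 1, 11), timedelta(days=7))
```

Each function returns a value between 0 and 1, so the results can be compared against whatever thresholds your own quality standards define.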

Additional dimensions you can track:

- Reliability refers to the degree to which data can be trusted to be consistent and dependable over time.

- Relevancy confirms that your data aligns with your business needs. This dimension is complex, especially for emerging datasets.

- Precision measures the level of detail or granularity in data, ensuring that it accurately represents the intended level of specificity.

- Understandability refers to the clarity and ease with which data can be comprehended by users, avoiding confusion and misinterpretation.

- Accessibility measures the availability and ease of access to data for authorized users, ensuring data is available when needed.


Use Cases

The use cases below highlight the critical role of data quality standards across industries and applications, where they affect decision-making, operational efficiency, and customer experiences.

- Customer Relationship Management (CRM): Ensuring accurate and complete customer data in a CRM system, such as valid contact information and purchase history, to enable effective communication and personalized interactions.

- Financial Reporting: Verifying the accuracy and consistency of financial data across various reports and systems to ensure compliance with regulatory requirements and provide reliable insights for decision-making.

- Healthcare Analytics: Validating medical data for accuracy, completeness, and consistency in electronic health records to improve patient care, treatment outcomes, and medical research.

- Supply Chain Optimization: Ensuring the accuracy and timeliness of supply chain data, such as shipping and delivery information, to streamline operations and enhance supply chain efficiency.

- Fraud Detection: Identifying anomalies and inconsistencies in transaction data to detect potential fraudulent activities, safeguarding financial systems and assets.

- Marketing Campaigns: Using high-quality demographic and behavioral data to target marketing campaigns effectively, ensuring messages reach the right audience and improving campaign ROI.

- Machine Learning Models: Feeding accurate and consistent data into machine learning or AI models to enhance their performance and generate more reliable predictions and insights.

- E-commerce Inventory Management: Maintaining precise and up-to-date inventory data to prevent stockouts, minimize overstock situations, and enhance customer satisfaction.

Best Practices

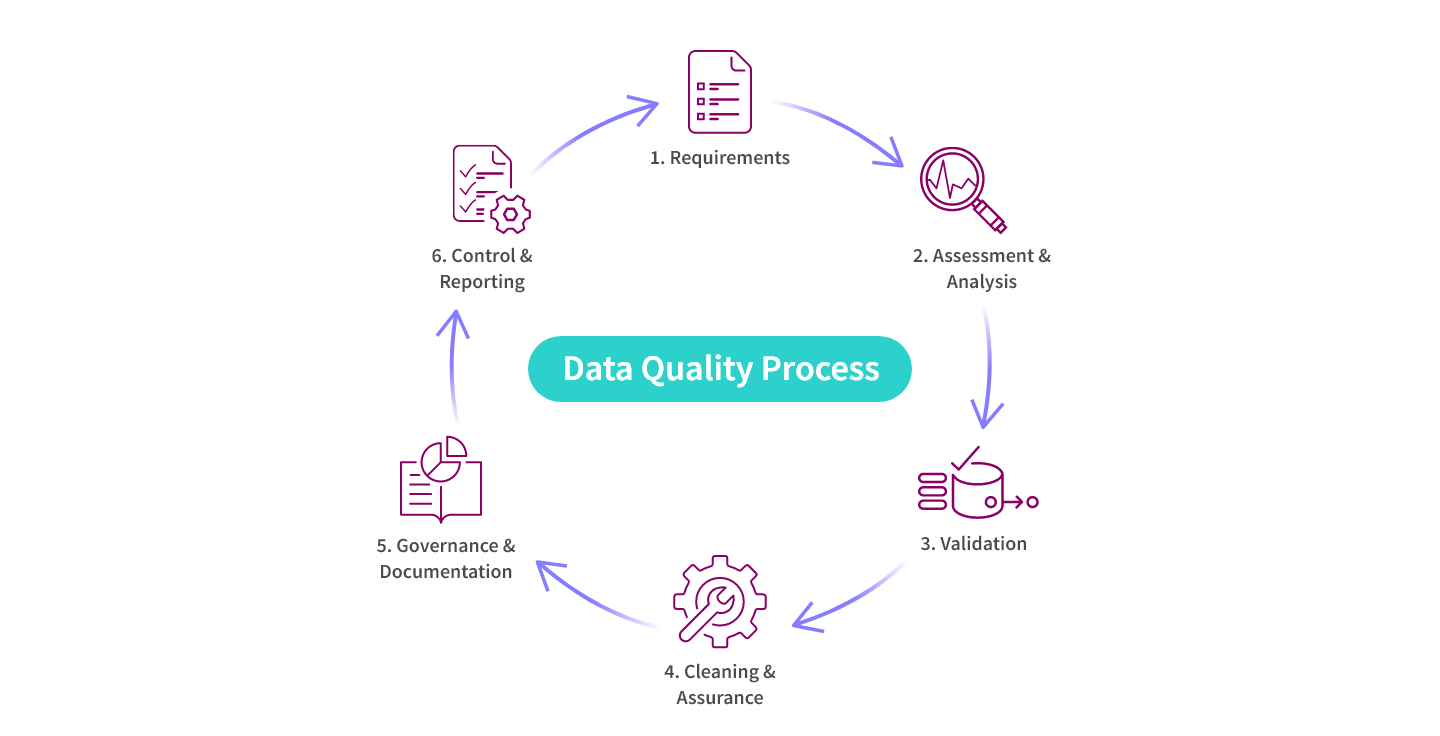

The data quality process encompasses a range of strategies to ensure accurate, reliable, and valuable data throughout the data lifecycle.

Here are some key steps to follow:

- Requirements Definition

  - Establish clear quality standards and criteria for high-quality data based on business needs and objectives.

- Assessment and Analysis

  - Perform data exploration, profiling, and analysis to understand data characteristics, identify anomalies, and assess overall quality.

- Data Validation

  - Implement validation rules during data entry and integration to ensure data conforms to predefined formats, standards, and business rules.

- Data Cleansing and Assurance

  - Employ quality assurance measures, including cleaning, updating, removing duplicates, correcting errors, and filling in missing values.

- Data Governance and Documentation

  - Develop a robust data governance framework to oversee quality, establish ownership, and enforce policies.

  - Maintain comprehensive records for data sources, transformations, and quality procedures.

  - Ensure data lineage by creating a data mapping framework that collects and manages metadata from each step in its lifecycle.

- Control and Reporting

  - Data Quality Control: Use automated tools for continuous monitoring, validation, and data standardization to ensure ongoing accuracy.

  - Monitoring and Reporting: Regularly track quality metrics and generate progress reports.

  - Continuous Improvement: Treat data quality as an ongoing process, continually refining practices.

  - Collaboration: Foster collaboration between IT, data management, and business units for enhanced quality.

  - Standardized Data Entry: Implement processes to reduce errors during data collection.

  - User Training: Educate users about data quality and proper handling practices.

  - Feedback Loops: Establish mechanisms for users to report issues and improve data.

  - Data Integration: Ensure consistency during integration through attribute mapping.

  - Stewardship: Appoint data stewards responsible for monitoring, maintaining, and improving quality.
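The validation and cleansing steps above can be sketched in a few lines. This is an illustration under stated assumptions rather than a recommended implementation: the order fields, the rule thresholds, the default quantity, and the choice to deduplicate on `order_id` are all hypothetical.

```python
# Hypothetical business rules: each field maps to a predicate it must satisfy.
RULES = {
    "order_id": lambda v: isinstance(v, int) and v > 0,
    "quantity": lambda v: isinstance(v, int) and 1 <= v <= 1000,
    "country": lambda v: isinstance(v, str) and len(v) == 2 and v.isupper(),
}

def validate(row):
    """Return the names of the fields that break a rule."""
    return [field for field, ok in RULES.items() if not ok(row.get(field))]

def cleanse(rows, defaults):
    """Fill missing values, standardize country codes, drop duplicate orders."""
    seen = set()
    cleaned = []
    for row in rows:
        # Missing (None) values fall back to the supplied defaults.
        row = {**defaults, **{k: v for k, v in row.items() if v is not None}}
        if isinstance(row.get("country"), str):
            row["country"] = row["country"].strip().upper()
        if row.get("order_id") in seen:
            continue  # keep only the first record for each order_id
        seen.add(row.get("order_id"))
        cleaned.append(row)
    return cleaned

raw = [
    {"order_id": 1, "quantity": 2, "country": " us "},
    {"order_id": 1, "quantity": 2, "country": "US"},    # duplicate order
    {"order_id": 2, "quantity": None, "country": "de"},  # missing quantity
    {"order_id": 3, "quantity": 5000, "country": "FR"},  # out of range
]

cleaned = cleanse(raw, defaults={"quantity": 1})
report = {row["order_id"]: validate(row) for row in cleaned}
```

Cleansing runs before validation here so that fixable issues, such as stray whitespace or lowercase country codes, do not show up as rule violations. Whether filling a missing value with a default is acceptable depends on your own quality requirements.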

Challenges

There are many challenges associated with this process. Overcoming these challenges demands a combination of technical solutions, organizational commitment, and a holistic approach. Here are some of the challenges you may face:

- Incomplete or Inaccurate Data: Data may be missing values, contain errors, or lack necessary details, leading to incomplete or inaccurate insights.

- Data Silos: Disparate systems and departments can lead to isolated pockets of data, making it difficult to ensure consistency and accuracy across the organization.

- Data Integration Complexity: Merging data from various sources can introduce inconsistencies and require significant effort to align and clean the data.

- Changing Data Formats: As data sources evolve, changes in formats, structures, and definitions can lead to inconsistencies and difficulties in maintaining standards.

- Limited Data Governance: Insufficient oversight and governance can lead to unclear roles, responsibilities, and accountability.

- Lack of Standardization: Inconsistent data entry practices and varying data definitions can result in incompatible and unreliable data.

- Data Volume and Velocity: Managing and maintaining standards becomes more challenging as data volumes increase, particularly in real-time or high-velocity data streams.

- Poor Data Entry Practices: Manual data entry errors, duplicate records, and outdated information can negatively impact quality.

- Legacy Systems: Older systems might lack the capabilities to enforce necessary measures or seamlessly integrate with modern data quality tools.

- Cultural Challenges: Resistance to adopting key practices and a lack of awareness about the importance of data quality can hinder effective quality management.

- Resource Constraints: Inadequate budget, time, and skilled personnel can limit an organization's ability to implement comprehensive initiatives.

- Continuous Monitoring: Regularly monitoring and maintaining quality requires ongoing effort, and lapses can lead to degradation over time.

- Data Privacy and Security Concerns: Ensuring quality while safeguarding sensitive information requires a delicate balance to avoid compromising privacy and security.

- Data Migration: During data migration or system upgrades, maintaining standards can be complex, potentially leading to data loss or degradation.

- Complex Data Ecosystems: In environments with diverse data sources, data pipelines, and analytics tools, maintaining consistent quality can be challenging.
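Some of these challenges, such as changing data formats, can be caught early with automated checks. The sketch below compares an incoming record against an expected schema. The schema, the field names, and the shape of the report are assumptions for illustration only.

```python
# Hypothetical expected schema: field name -> required Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}

def schema_drift(row, expected=EXPECTED_SCHEMA):
    """Report fields that are missing, unexpected, or of the wrong type."""
    missing = sorted(set(expected) - set(row))
    unexpected = sorted(set(row) - set(expected))
    wrong_type = sorted(
        field for field in set(expected) & set(row)
        if not isinstance(row[field], expected[field])
    )
    return {"missing": missing, "unexpected": unexpected, "wrong_type": wrong_type}

# A source has renamed "currency" to "curr" and started sending string IDs.
drift = schema_drift({"order_id": "A-17", "amount": 9.5, "curr": "EUR"})
```

Running a check like this on a sample of each incoming batch gives an early signal that a source has changed before the inconsistency spreads downstream.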

Data Quality vs Data Integrity

Data quality ensures data is accurate, complete, and suitable for its purpose. Data integrity focuses on preventing unauthorized changes and maintaining data accuracy throughout its lifecycle. While data quality encompasses many aspects of data, data integrity specifically safeguards against alterations or corruption.
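One common way to detect alterations or corruption is to store a fingerprint of each record and recompute it later. The sketch below uses a SHA-256 hash over a canonical JSON form. The record fields and the `fingerprint` helper are hypothetical, and a real integrity program would also rely on access controls and audit trails.

```python
import hashlib
import json

def fingerprint(record):
    """Hash a canonical JSON form so that key order does not affect the result."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

original = {"account": "12345", "balance": 250.0}
stored_hash = fingerprint(original)

# Later: recompute the hash and compare it with the stored value.
tampered = {**original, "balance": 950.0}
is_unchanged = fingerprint(original) == stored_hash
is_altered = fingerprint(tampered) != stored_hash
```

A matching hash shows only that the record has not changed since the fingerprint was taken. It says nothing about whether the original values were accurate, which is the data quality side of the distinction.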

DataOps for Analytics

Modern data integration delivers real-time, analytics-ready and actionable data to any analytics environment, from Qlik to Tableau, Power BI and beyond.

- Real-Time Data Streaming (CDC): Extend enterprise data into live streams to enable modern analytics and microservices with a simple, real-time and universal solution.

- Agile Data Warehouse Automation: Quickly design, build, deploy and manage purpose-built cloud data warehouses without manual coding.

- Managed Data Lake Creation: Automate complex ingestion and transformation processes to provide continuously updated and analytics-ready data lakes.