Data Migration

What it is, the main types and the process. This guide provides definitions and practical advice to help you understand data migration.


What is Data Migration?

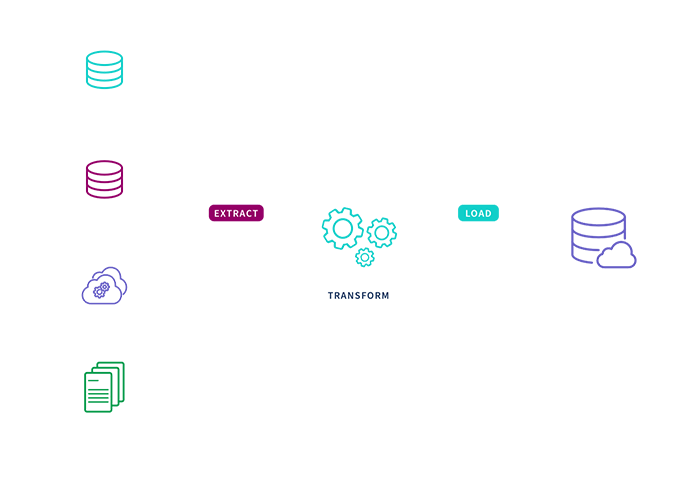

Data migration is the process of moving data between storage systems, applications, or formats. Typically a one-time process, it can include preparing, extracting, transforming and loading the data. A data migration project can be initiated for many reasons, such as upgrading databases, deploying a new application or switching from on-premises to cloud-based storage and applications.
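To make the extract, transform and load steps concrete, here is a minimal Python sketch. It assumes a hypothetical CSV export as the source and an in-memory SQLite database as the target; the `customers` table and its columns are made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical legacy export with inconsistent casing and stray whitespace.
SOURCE_CSV = """id,email,country
1, Alice@Example.com ,us
2,bob@example.com,GB
"""


def extract(text):
    """Extract: read raw rows from the source format."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: clean each row and reshape it for the target schema."""
    return [
        (int(r["id"]), r["email"].strip().lower(), r["country"].strip().upper())
        for r in rows
    ]


def load(rows, conn):
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, email TEXT, country TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    target = sqlite3.connect(":memory:")
    load(transform(extract(SOURCE_CSV)), target)
    print(target.execute("SELECT * FROM customers ORDER BY id").fetchall())
    # [(1, 'alice@example.com', 'US'), (2, 'bob@example.com', 'GB')]
```

Keeping the three steps as separate functions makes each one testable on its own, which matters later when you build and test the migration in sections.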

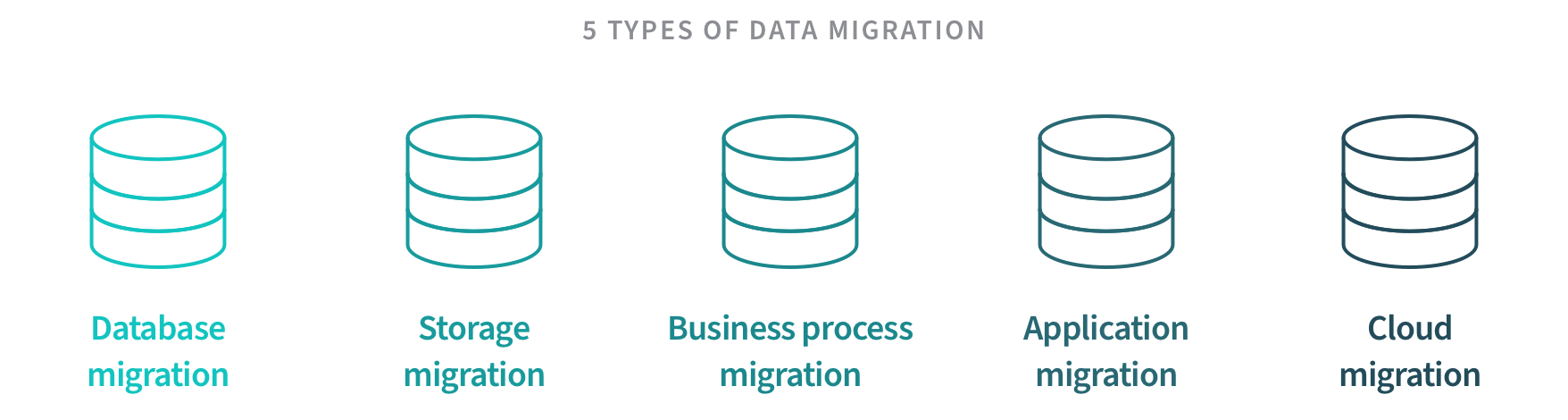

5 Types of Data Migration

Your specific business requirements will determine the type of data migration you undertake. Some projects, such as moving to a new CRM system, require you to involve the teams that rely on that application. Others, such as moving from one database vendor to another, can happen without involving the rest of the business.

Database migration can refer either to moving data from one database vendor to another or to upgrading your database software to a newer version. The data format can vary between vendors, so a transformation step may be required. In most cases, a change in database technology should not affect the application layer, but you should test to confirm.
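As an illustration of that transformation step, the sketch below converts values whose representation differs between two databases. It assumes a hypothetical legacy database that stores flags as `'Y'`/`'N'` text and dates as `DD/MM/YYYY` strings, and a target that expects booleans and ISO 8601 dates; the column names `ACTIVE_FLG` and `CREATED_DT` are invented for the example.

```python
from datetime import datetime

# Hypothetical vendor differences: legacy flags are 'Y'/'N' text and legacy
# dates are 'DD/MM/YYYY' strings; the target expects bools and ISO dates.


def convert_flag(value):
    """Map a 'Y'/'N' flag to a boolean and reject anything unexpected."""
    mapping = {"Y": True, "N": False}
    try:
        return mapping[value.strip().upper()]
    except KeyError:
        raise ValueError(f"unexpected flag value: {value!r}") from None


def convert_date(value):
    """Convert 'DD/MM/YYYY' text to an ISO 8601 'YYYY-MM-DD' string."""
    return datetime.strptime(value.strip(), "%d/%m/%Y").date().isoformat()


def convert_row(row):
    """Translate one legacy row into the target database's representation."""
    return {
        "is_active": convert_flag(row["ACTIVE_FLG"]),
        "created_on": convert_date(row["CREATED_DT"]),
    }


if __name__ == "__main__":
    print(convert_row({"ACTIVE_FLG": "y", "CREATED_DT": "31/01/2023"}))
    # {'is_active': True, 'created_on': '2023-01-31'}
```

Raising an error on unexpected values, rather than silently coercing them, surfaces dirty source data during testing instead of after go-live.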

Storage migration involves transferring data from an existing repository to another, often new, repository. The data usually remains unchanged during a storage migration. The goal is typically to upgrade to more modern technology that scales more cost-effectively and processes data faster.
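Because the data should arrive unchanged, a storage migration can be checked byte for byte. The sketch below assumes both repositories are reachable as local file-system paths (a simplification; a real target might be an object store or SAN with its own copy tools) and verifies every copied file with a SHA-256 checksum. The function names are hypothetical.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def migrate_tree(src_root, dst_root):
    """Copy every file under src_root into dst_root, keeping relative paths,
    and return the relative paths of any copies whose SHA-256 differs."""
    src_root, dst_root = Path(src_root), Path(dst_root)
    mismatches = []
    for src in sorted(src_root.rglob("*")):
        if not src.is_file():
            continue
        dst = dst_root / src.relative_to(src_root)
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)  # copy2 also tries to preserve metadata such as timestamps
        if sha256_of(src) != sha256_of(dst):
            mismatches.append(str(src.relative_to(src_root)))
    return mismatches


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as old, tempfile.TemporaryDirectory() as new:
        (Path(old) / "reports").mkdir()
        (Path(old) / "reports" / "q1.csv").write_text("id,total\n1,100\n")
        print(migrate_tree(old, new))  # an empty list means every copy verified
```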

Business process migration involves the transfer of databases and applications containing data related to customers, products, and operations. Data often requires transformation as it moves from one data model to another. These projects are usually triggered by a company reorganization, merger or acquisition.

Application migration refers to moving a software application such as an ERP or CRM system from one computing environment to another. The data usually requires transformation as it moves from one data model to another. This most often happens when a company switches to a new application vendor, and it may also involve moving from on-premises to a public cloud or from one cloud to another.
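Moving from one data model to another usually comes down to a field mapping plus a few custom rules. The sketch below is a hypothetical example: the legacy CRM field names (`AcctName`, `ContactName` and so on) and the new model's field names are invented for illustration and do not come from any real product.

```python
# Hypothetical mapping from a legacy CRM export to a new application's
# data model. Every field name here is illustrative, not from a real product.
FIELD_MAP = {
    "AcctName": "account_name",
    "Phone1": "phone",
    "OwnerEmail": "owner_email",
}
SPECIAL_FIELDS = {"ContactName"}  # handled by custom logic in to_new_model


def to_new_model(legacy):
    """Rename one-to-one fields, then split the single legacy name field."""
    record = {new: legacy.get(old) for old, new in FIELD_MAP.items()}
    # The new model stores first and last names separately. Splitting on the
    # first space is a simplification; real names need review rules.
    first, _, last = (legacy.get("ContactName") or "").strip().partition(" ")
    record["contact_first_name"] = first or None
    record["contact_last_name"] = last or None
    return record


def unmapped_fields(legacy):
    """Legacy fields the mapping does not cover, so gaps surface early."""
    return sorted(set(legacy) - set(FIELD_MAP) - SPECIAL_FIELDS)
```

Running `unmapped_fields` over a sample of source records is a cheap way to find fields that nobody has decided how to migrate yet.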

Cloud migration involves moving data, applications, or other business elements to a cloud computing environment such as a cloud data warehouse. This can mean moving from a locally hosted data center to the public cloud or from one cloud platform to another. The reverse process, known as “cloud exit”, involves migrating data or applications off the public cloud and back to an on-premises data center.

Data Migration Process

Data migration projects can be stressful because they involve moving critical data and often affect other stakeholders. You’ve probably heard horror stories of extended system downtime and lost or corrupted data. That is why you need a well-defined data migration plan before you move anything.

Your needs are unique, so not every step described in this section may be necessary for your migration. This guidance also assumes you’re executing the full migration as a one-time event within a limited time window. Alternatively, you may choose a more complex, phased approach in which the old and new systems run in parallel.
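One way to run the old and new systems in parallel is to write to both while the old system remains the source of truth. The sketch below is a hypothetical `DualWriter` that wraps two dictionary-like stores; a real implementation would sit in front of databases or services and need more careful error handling.

```python
class DualWriter:
    """Hypothetical sketch for a phased migration: writes go to both the
    legacy store and the new store, but reads come from the legacy store
    (the source of truth) until cutover."""

    def __init__(self, legacy, new):
        self.legacy = legacy
        self.new = new
        self.failed_new_writes = []

    def write(self, key, value):
        self.legacy[key] = value  # must succeed: legacy is authoritative
        try:
            self.new[key] = value
        except Exception as exc:
            # Don't fail the caller; record the key so it can be re-copied
            # (reconciled) before cutover.
            self.failed_new_writes.append((key, exc))

    def read(self, key):
        return self.legacy[key]

    def mismatched_keys(self):
        """Keys whose value is missing or different in the new store."""
        return sorted(k for k in self.legacy if self.new.get(k) != self.legacy[k])
```

Checking `mismatched_keys` until it stays empty gives you evidence that the new system is in sync before you switch reads over to it.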

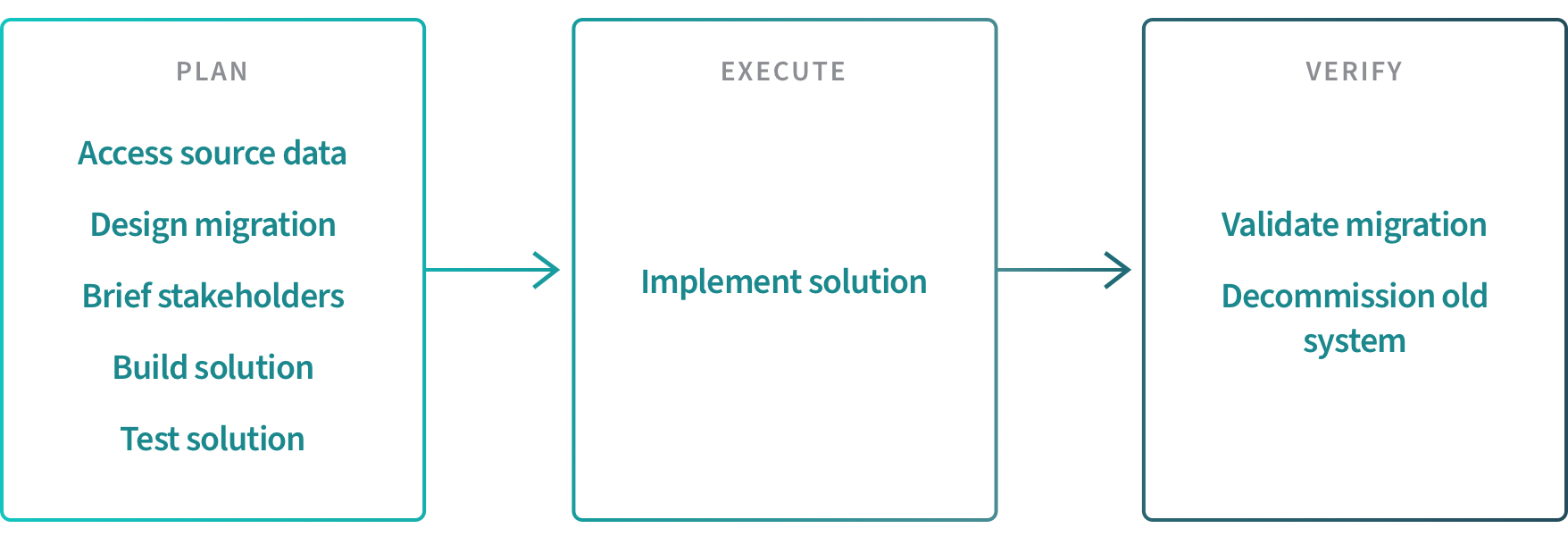

Below we describe the three key phases of the data migration process for most projects: PLAN, EXECUTE and VERIFY.

1. PLAN

This first phase is the most critical. Here you’ll assess and clean your source data, analyze business requirements and dependencies, develop and test your migration scenarios, and codify a formal data migration plan.

- Assess and clean your source data. The first step in your planning phase is to gain a full understanding of the size, stability and format of the data you plan to move. This means performing a complete audit of your source data, looking for inaccurate or incomplete data fields. The audit will also help you map fields to your target system and identify gaps. After the audit is complete, clean the data, resolving any issues you uncovered.

- Design your migration. Now you’re ready to specify what data will be moved, the details of your project’s technical architecture and processes, plus budget and timelines. Key aspects of this design include identifying the tools and resources needed, setting strict security standards and establishing data quality controls.

- Brief your key stakeholders. With your migration design in hand, you should communicate to all relevant stakeholders the goals of the project, key milestones and any elements which may impact other teams, such as system downtime.

- Build your solution. Finally, you can start coding the migration logic you designed to extract, transform and load data into the new repository. If you’re working with a large dataset, consider splitting it into sections and then building and testing it section by section.

- Test your solution. Even though you tested repeatedly during the build-out, you should test your completed code against a mirror of the production environment before moving to the execute phase.
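The source-data audit and the section-by-section build described above can be sketched in a few lines of Python. This is a minimal illustration that assumes the source rows have already been read into a list of dictionaries; the function names `audit` and `in_sections` and the sample fields are hypothetical.

```python
from collections import Counter


def audit(rows, required_fields):
    """Count missing or blank values per required field across the source rows."""
    missing = Counter()
    for row in rows:
        for field in required_fields:
            value = row.get(field)
            if value is None or (isinstance(value, str) and not value.strip()):
                missing[field] += 1
    return dict(missing)


def in_sections(rows, size):
    """Yield the dataset in fixed-size sections so each can be built and tested on its own."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


if __name__ == "__main__":
    source = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": " "}, {"id": 3}]
    print(audit(source, ["id", "email"]))  # {'email': 2}
    for section in in_sections(source, 2):
        print([row["id"] for row in section])  # [1, 2] then [3]
```

In practice the audit counts feed the cleaning work, and each section produced by `in_sections` would go through the same transform and test steps before the next one is attempted.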

2. EXECUTE

- Implement your solution. The time has come to execute the migration. This can be a stressful time, especially for directly affected stakeholders, but because you’ve thoroughly tested your solution, you can proceed with confidence.
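When the target supports transactions, loading inside a single transaction means a failure partway through leaves the target unchanged rather than half-loaded. The sketch below uses Python’s built-in `sqlite3` module purely as a stand-in target; the `customers` table is hypothetical, and very large loads may instead need per-section commits or a staging table.

```python
import sqlite3


def load_atomically(conn, rows):
    """Insert all rows in a single transaction: either every row lands or none do."""
    with conn:  # the connection context manager commits on success, rolls back on error
        conn.executemany("INSERT INTO customers (id, email) VALUES (?, ?)", rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
    conn.commit()
    try:
        # The duplicate id makes the second insert fail...
        load_atomically(conn, [(1, "a@example.com"), (1, "dup@example.com")])
    except sqlite3.IntegrityError as exc:
        print("load failed, rolled back:", exc)
    # ...so the first row was rolled back too.
    print(conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0])  # 0
```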

3. VERIFY

- Validate your migration. To ensure your migration went as planned, you should conduct data validation testing. Specifically, you’ll want to check whether all the required data was transferred, whether the destination tables contain correct values and whether any data was lost.

- Decommission your old systems. The final step in the migration process is to shut down and dispose of the legacy systems which supported your source data. This will result in cost savings and resource efficiencies.
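The validation checks above (was everything transferred, are the values correct, was anything lost) can be approximated by comparing row counts, a content fingerprint and the set of keys on each side. This sketch assumes both source and target are SQLite databases with identical schemas, which is a simplification: if values were transformed during the migration, compare the target against the expected transformed output, or normalize values before hashing. The function names are hypothetical.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key):
    """Return (row_count, SHA-256 over every row, ordered by the key column)."""
    digest = hashlib.sha256()
    count = 0
    # Identifiers are formatted into the SQL, so pass only trusted names.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def missing_keys(src, dst, table, key):
    """Key values present in the source table but absent from the target."""
    src_keys = {r[0] for r in src.execute(f"SELECT {key} FROM {table}")}
    dst_keys = {r[0] for r in dst.execute(f"SELECT {key} FROM {table}")}
    return sorted(src_keys - dst_keys)


if __name__ == "__main__":
    src, dst = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    for conn in (src, dst):
        conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
    src.executemany("INSERT INTO customers VALUES (?, ?)",
                    [(1, "a@example.com"), (2, "b@example.com")])
    dst.execute("INSERT INTO customers VALUES (1, 'a@example.com')")  # row 2 was "lost"
    print(table_fingerprint(src, "customers", "id")[0],
          table_fingerprint(dst, "customers", "id")[0])  # 2 1
    print(missing_keys(src, dst, "customers", "id"))  # [2]
```

Matching counts and fingerprints give quick confidence; when they differ, `missing_keys` narrows down which records to investigate.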
